Avoid These Top 10 Hadoop Data Migration Traps

By Tony Velcich & Steve Kilgore

Dec 17, 2020

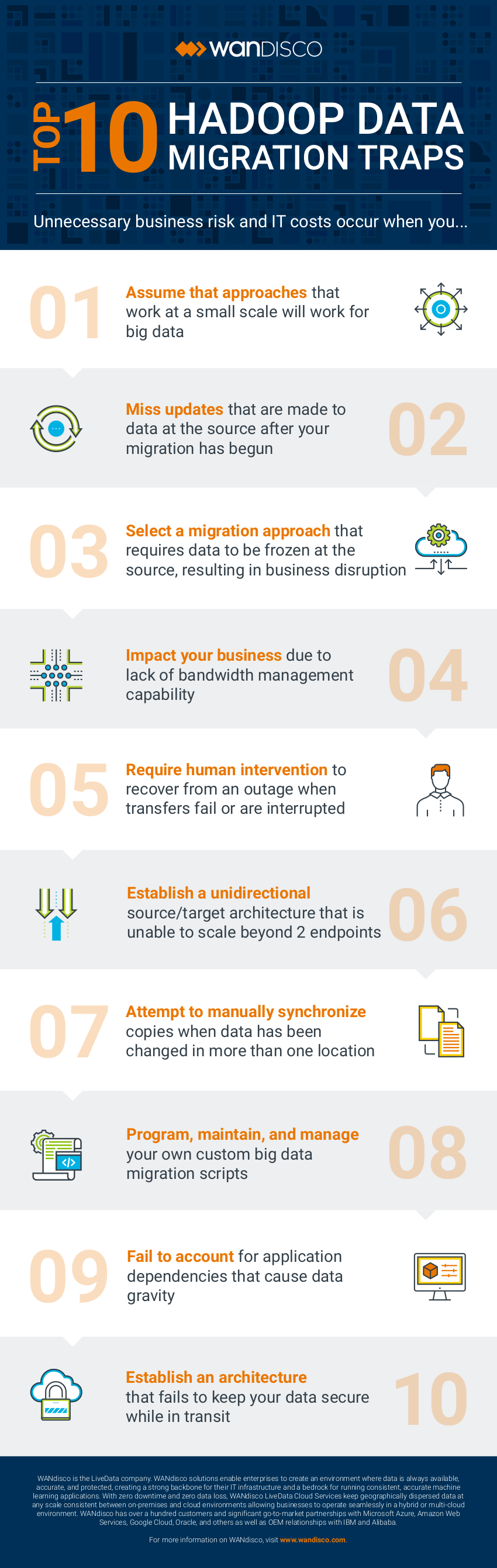

Organizations are modernizing their data infrastructures, which often includes migrating their on-premises Hadoop data to the cloud. WANdisco has worked with many organizations on their successful cloud data migration projects. We’ve also witnessed organizations attempt their own manual migration initiatives or try to leverage legacy migration approaches, both of which can be riddled with traps. This document summarizes the top 10 data migration traps that we have seen and helped organizations overcome.

Trap #1: Assume that approaches that work at a small scale will work for big data

There are a number of ways to transfer small amounts of data to the cloud, particularly if that data is static and unchanging. The danger lies in assuming that the same approaches will work when you’ve got a large volume of data, especially when that data is changing while you are in the process of moving it to the cloud.

If the data set is large and static, it's a matter of having enough time and enough bandwidth to migrate the data, or enough time to load it onto a bulk transfer device, such as AWS Snowball or Azure Data Box, and have that device shipped to the cloud service provider and then uploaded.

The real challenge arises when you’re talking about a large volume of data and that data is actively changing while you're in the process of moving it. In this case, the approaches that may work on small data sets will not work effectively and often lead to failed data migration projects. Furthermore, this first trap often leads to the next two traps described below.

Trap #2: Miss updates that are made to data at the source after your migration has begun

When you need to migrate data that is actively changing—either new data is being ingested, or existing data is being updated or deleted—you’ve got a choice to make. You can either freeze the data at the source until the migration is complete (see #3 below), or allow the data to continue to change at the source, in which case you need to figure out how to take into account all those changes so when the migration is complete you don’t end up with a copy that's already badly out of date.

To prevent data inconsistencies between source and target, you need a way to identify and migrate any changes that may have occurred. The typical approach is to perform multiple iterations, rescanning the data set each time to catch changes made since the last iteration. This method allows you to iteratively approach a consistent state. However, if you've got a big enough volume of data and it's changing frequently, it may be impossible to ever catch up with the changes being made. This is a fairly complicated problem, and people often don't anticipate the full impact it will have on their resources and business.
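The catch-up problem can be illustrated with a deliberately simplified model. The sketch below assumes a constant copy rate and a constant rate at which data changes at the source, and it ignores the time spent rescanning; the function name and all figures are hypothetical, not measurements from any real migration.

```python
from typing import Optional


def passes_to_converge(initial_bytes: float, copy_rate: float,
                       change_rate: float, cutover_bytes: float,
                       max_passes: int = 1000) -> Optional[int]:
    """Count the rescan passes needed before the backlog fits a cutover window.

    Rates are in bytes per second. Returns None when the source changes at
    least as fast as it can be copied, because the backlog never shrinks.
    """
    if change_rate >= copy_rate:
        return None
    backlog = initial_bytes
    for n in range(1, max_passes + 1):
        pass_seconds = backlog / copy_rate    # time to copy the current backlog
        backlog = change_rate * pass_seconds  # data changed during that pass
        if backlog <= cutover_bytes:
            return n
    return None


# 1 PB to move, 100 MB/s copy rate, 50 MB/s change rate, 1 GB acceptable backlog
print(passes_to_converge(1e15, 100e6, 50e6, 1e9))   # 20
# Change rate equal to copy rate: the migration never catches up
print(passes_to_converge(1e15, 100e6, 100e6, 1e9))  # None
```

Even in this optimistic model, each pass only shrinks the backlog by the ratio of change rate to copy rate, so a busy source can require many passes before a cutover is possible.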

Trap #3: Select a migration approach that requires data to be frozen at the source, resulting in business disruption

The other option is to freeze the data at the source to prevent any changes from occurring, which certainly makes the migration task a lot simpler. With this approach, you can be confident that the copy you upload to the new location (whether over a network connection or via a bulk transfer device) is consistent with what exists at the source, because no changes were allowed during the migration process.

The problem with this approach is that it requires system downtime and disrupts your business. These systems are almost always business critical, and bringing them down or freezing them for an extended period of time usually isn’t acceptable to the business processes that rely on them. With a bulk transfer device, the transfer can take days to weeks. If you transfer data over a dedicated network connection, the duration depends on the network bandwidth you have available: moving a petabyte of data over a one-gigabit-per-second link takes over 90 days, even if the link is fully utilized. For the vast majority of organizations, days, weeks, or months of downtime and business disruption are just not acceptable.
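The 90-day figure is easy to verify with back-of-the-envelope arithmetic. The sketch below assumes decimal units (1 PB = 10^15 bytes, 1 Gbit/s = 10^9 bits per second); the `utilization` parameter is an illustrative addition for the common case where only part of the link can be used for the migration.

```python
def transfer_days(data_bytes: float, link_bits_per_sec: float,
                  utilization: float = 1.0) -> float:
    """Days needed to move data_bytes over a link at a sustained utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = data_bytes * 8 / (link_bits_per_sec * utilization)
    return seconds / 86_400  # seconds per day


PETABYTE = 10**15          # bytes
GIGABIT_PER_SEC = 10**9    # bits per second

print(round(transfer_days(PETABYTE, GIGABIT_PER_SEC), 1))       # 92.6
print(round(transfer_days(PETABYTE, GIGABIT_PER_SEC, 0.5), 1))  # 185.2
```

If the migration can only use half of the link, the same petabyte takes roughly six months.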

Trap #4: Impact your business due to lack of bandwidth management capability

Companies that choose to transfer data across the network often fail to take into account all of the other business processes that are using that same network for their day-to-day operations. Even if you have a dedicated network channel, this needs to be factored in, since you usually can’t use all of the bandwidth for the migration without impacting other users and processes.

It is important to have a mechanism in place to throttle the data being migrated so that you don't introduce a negative business impact. In many instances, we have seen companies turn on the faucet, start moving data, and wind up saturating the pipe and impacting other parts of the business. They then need to shut off the migration and restart it at the end of the business day. This leads to trap number five, because the interruption effectively introduces an outage into your migration.
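One common way to throttle a transfer is a token bucket, sketched below. This is a generic illustration rather than a description of any particular product; the class and function names are hypothetical, and the `send` callable stands in for whatever actually writes data to the network.

```python
import time
from typing import Callable, Iterable


class TokenBucket:
    """Limits throughput to rate_bytes_per_sec, allowing bursts up to burst_bytes."""

    def __init__(self, rate_bytes_per_sec: float, burst_bytes: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_bytes_per_sec
        self.capacity = burst_bytes
        self.tokens = burst_bytes
        self.clock = clock
        self.last = clock()

    def delay_for(self, nbytes: int) -> float:
        """Reserve nbytes and return how long the caller should wait before sending."""
        now = self.clock()
        # Refill tokens for the time elapsed since the last call, up to capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        self.tokens -= nbytes
        if self.tokens >= 0:
            return 0.0
        return -self.tokens / self.rate  # time needed to pay back the deficit


def send_throttled(chunks: Iterable[bytes], send: Callable[[bytes], None],
                   bucket: TokenBucket,
                   sleep: Callable[[float], None] = time.sleep) -> None:
    """Send each chunk only after waiting long enough to respect the rate limit."""
    for chunk in chunks:
        sleep(bucket.delay_for(len(chunk)))
        send(chunk)
```

Because the limit is a parameter, it can be lowered during business hours and raised overnight without stopping and restarting the migration.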

Trap #5: Require human intervention to recover from an outage when transfers fail or are interrupted

If you stopped the migration or incurred an outage, how do you determine exactly how much of the data has been correctly migrated, and therefore the point from which to recover? Depending on the tools you’re using, will it even be possible to resume from that point, or will you effectively have to start the process over from the beginning? It’s a complex problem, and relying on a manual process adds significant risk and cost when you have to unexpectedly interrupt and resume the migration.

On the other hand, having a process that can manage interruption and resumption of data transfer automatically greatly reduces the business risks involved, and is essential to a successful data migration project.
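As a minimal sketch of what automatic resumption involves, the example below records each completed file in a journal so that a restarted run skips work already done. It assumes file-level granularity and a local JSON journal; the function names and the `copy_one` callable are hypothetical, and a real tool would also verify checksums and handle files that change after being copied.

```python
import json
import os
from pathlib import Path
from typing import Callable, Iterable, Set


def load_done(journal: Path) -> Set[str]:
    """Read the set of paths that earlier runs finished copying."""
    if not journal.exists():
        return set()
    return set(json.loads(journal.read_text()))


def save_done(journal: Path, done: Set[str]) -> None:
    """Persist progress via a temp file and rename, so a crash cannot corrupt it."""
    tmp = journal.with_suffix(".tmp")
    tmp.write_text(json.dumps(sorted(done)))
    os.replace(tmp, journal)


def migrate(paths: Iterable[str], copy_one: Callable[[str], None],
            journal: Path) -> Set[str]:
    """Copy every path not yet journaled; safe to rerun after an interruption."""
    done = load_done(journal)
    for path in paths:
        if path in done:
            continue           # already transferred by an earlier run
        copy_one(path)         # may raise if the link drops
        done.add(path)
        save_done(journal, done)
    return done
```

Rerunning `migrate` with the same journal after a failure picks up at the first file that was not recorded, with no human needing to work out where the transfer stopped.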

Trap #6: Establish a unidirectional source/target architecture that is unable to scale beyond 2 endpoints

The use of hybrid cloud deployments is increasingly popular. That may entail the use of a public cloud environment together with a private cloud or an organization’s traditional on-premises infrastructure. For a true hybrid cloud scenario, changes need to be able to occur in any location, and each change needs to be propagated to the other system. Approaches that only account for unidirectional data movement do not support true hybrid cloud scenarios because they require a source/target relationship.

This is further complicated when you go beyond just two endpoints. We are seeing more and more distributed environments where there isn’t just one source and a destination, but multiple cloud regions for redundancy purposes or even across multiple cloud providers.

In order to avoid locking yourself into a single point solution, you need to be able to manage live data across multiple endpoints. In this case, you need a solution that can replicate changes across multiple environments and resolve any potential data change conflicts, preferably before they arise.

Trap #7: Attempt to manually synchronize copies when data has been changed in more than one location

Any attempt to manually synchronize data is resource intensive, costly, and error prone. Doing this manually across two environments is difficult, and it becomes significantly more complicated across multiple environments. These scenarios absolutely require an automated approach to replicate data across many environments and provide a mechanism to ensure the data is kept consistent across all of them.

Trap #8: Program, maintain, and manage your own custom big data migration scripts

Organizations with deep technical expertise in Hadoop will be familiar with DistCp (distributed copy), and often want to leverage this free open source tool to develop their own custom migration scripts. However, DistCp was designed for inter/intra-cluster copying, not for large-scale data migrations. DistCp only supports unidirectional copying of data as of a specific point in time. It does not cater to changing data and requires multiple scans of the source in order to pick up changes made between each run. These restrictions introduce many of the issues discussed above, which can be very complex problems to solve. Organizations are much better off spending their valuable resources on development and innovation in the new cloud environment rather than building their own migration solutions.
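For readers who have not seen one, the core of a home-grown script is usually a repeated DistCp invocation like the one built below. This is only a sketch: the helper name, cluster address, and bucket name are hypothetical, while `-update`, `-delete`, `-m`, and `-bandwidth` are standard DistCp options. The function only builds the command and does not run it.

```python
from typing import List, Optional


def distcp_update_command(source: str, target: str, max_maps: int = 20,
                          bandwidth_mb_per_map: Optional[int] = None) -> List[str]:
    """Build the argument list for one incremental DistCp pass."""
    # -update copies only files that differ from the target;
    # -delete removes target files that no longer exist at the source.
    cmd = ["hadoop", "distcp", "-update", "-delete", "-m", str(max_maps)]
    if bandwidth_mb_per_map is not None:
        # DistCp applies this limit per map task, in MB per second.
        cmd += ["-bandwidth", str(bandwidth_mb_per_map)]
    return cmd + [source, target]


# Hypothetical source and target locations
print(" ".join(distcp_update_command(
    "hdfs://nn1:8020/data", "s3a://example-bucket/data",
    bandwidth_mb_per_map=10)))
```

Everything around this one command (scheduling passes, detecting failures, deciding when the target is consistent enough to cut over) is what the custom script has to supply and maintain.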

Trap #9: Fail to account for application dependencies that cause data gravity

Data gravity is an interesting concept. It refers to the ability of data to attract applications, services, and other data. The greater the amount of data, the greater the force (gravity) it has to attract more applications and services. Data gravity also often drives dependencies between applications.

For example, you might have one application that takes the output from another application as its input, and that may in turn feed other applications further downstream. If you talk to a business unit or user who designed a given application, they're going to know what their inputs are, but they may or may not be aware of everyone who is using the data they have created. It becomes very easy to miss a dependency. And then what happens when you move that application into the cloud? If the data it generates is not being synchronized back down to the on-premises environment, the other applications further down the workflow suddenly aren’t getting current data.

This further emphasizes the need for a solution that supports bi-directional or ideally multi-directional replication.
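One way to make such hidden dependencies visible before moving an application is to record which applications feed which, then walk that graph. The sketch below is a generic breadth-first traversal; the application names and the `FEEDS` mapping are invented for illustration.

```python
from collections import deque
from typing import Dict, List, Set


def downstream_consumers(feeds: Dict[str, List[str]], app: str) -> Set[str]:
    """Return every application that directly or indirectly consumes app's output."""
    seen: Set[str] = set()
    queue = deque([app])
    while queue:
        current = queue.popleft()
        for consumer in feeds.get(current, []):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    seen.discard(app)  # guard against cycles that lead back to the start
    return seen


# Hypothetical pipeline: each key feeds the applications in its list
FEEDS = {
    "ingest": ["cleansing"],
    "cleansing": ["reporting", "fraud_scoring"],
    "fraud_scoring": ["alerts"],
}

print(sorted(downstream_consumers(FEEDS, "cleansing")))
# ['alerts', 'fraud_scoring', 'reporting']
```

Any application in the result that stays on-premises after `cleansing` moves to the cloud will need its input data replicated back.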

Trap #10: Establish an architecture that fails to keep your data secure while in transit

Data security is essential. Concerns about cloud data security are one of the items that have slowed cloud adoption across certain industries such as financial services and banking. While cloud adoption has now seen a significant increase across all industries, security remains critically important. Information security teams often feel uncomfortable letting critical customer data outside of their own four walls. It's happening, but they have to be satisfied that it's happening in a secure manner. This includes ensuring the data is secure while it is in transit during the migration process.

Having a dedicated network channel for the transfer not only helps with the bandwidth issues discussed previously, it also decreases the potential attack surface for someone to get access to your data while it's in transit. Data should also always be encrypted when being transferred over that pipe. Ensuring your solution supports standard security mechanisms, such as SSL/TLS for encryption and Kerberos for authentication, is another important requirement.
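As a small illustration of what enforcing encryption in transit can look like on the client side, the sketch below uses Python's standard `ssl` module to build a context that verifies the server certificate and refuses protocol versions older than TLS 1.2. The function name and the choice of TLS 1.2 as the floor are assumptions for the example, not requirements stated in this article.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings: verified certificates, TLS 1.2 or newer."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse to negotiate legacy SSL and early TLS versions.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = strict_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

A socket would then be wrapped with `ctx.wrap_socket(sock, server_hostname=host)` before any data is sent.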

WANdisco LiveData Strategy

An automated data migration solution that enables users to avoid the traps outlined above will significantly reduce the time, cost, and risk associated with large scale data lake migrations to the cloud.

WANdisco provides a solution promoting a LiveData strategy. Utilizing a LiveData strategy, migrations can be performed without requiring any application changes, downtime, or business disruption, even while data sets are under active change. WANdisco’s LiveData capabilities perform migrations of any scale with a single pass of the source data while supporting continuous replication of ongoing changes. Furthermore, WANdisco’s patented Distributed Coordination Engine enables active-active replication to support true hybrid cloud or multi-cloud implementations and ensure 100% data consistency across multiple distributed environments.

For more information go to https://wandisco.com/products.

Authors

Steve Kilgore, Vice President, Field Technical Operations at WANdisco

Steve manages WANdisco’s global Solutions Architecture and Partner technical teams. He has over 30 years of industry experience including stints at Sun Microsystems and Oracle, as well as running his own successful consulting company for over 16 years, serving as a trusted advisor to Fortune 500 companies such as Fannie Mae, Freddie Mac, Charles Schwab, Delta Airlines, and AT&T. A man of many interests, Steve is also a Master Scuba Diver with over 1000 dives, a veteran of 4 Himalayan treks as well as several mountaineering expeditions in the Rockies and Andes, and a certified Thai Chef.

Tony Velcich, Sr. Director of Product Marketing at WANdisco

Tony is an accomplished product management and marketing leader with over 25 years of experience in the software industry. Tony is currently responsible for product marketing at WANdisco, helping to drive go-to-market strategy, content and activities. Tony has a strong background in data management having worked at leading database companies including Oracle, Informix and TimesTen where he led strategy for areas such as big data analytics for the telecommunications industry, sales force automation, as well as sales and customer experience analytics.