A glimpse into blockchain's future

The Hitchhiker’s Guide to Blockchains: A Trust Based Taxonomy

Abstract

A trust based taxonomy of blockchains is presented. We consider the evolution of trust and draw parallels to significant societal developments in which information technology tools played a key role. This approach permits us to understand the origins of blockchains, the excitement that currently permeates this space and the promise this technology holds for the future. Besides providing an up-to-date literature survey, we take a critical look at the architectural elements of public and private blockchains and discuss various trade-offs. In addition to demystifying the technology behind blockchains, we demonstrate that not all pieces of the blockchain puzzle are created equal. In particular, we show that consensus mechanisms play a vital role in developing highly available distributed solutions and argue that given that foundation, building distributed applications becomes extremely easy. This argument is supported by sketching the construction of a serverless gold exchange. It is also shown that the barrier to entry for consensus mechanisms is very high. After emphasizing the importance of having the correct consensus mechanism, we offer some guidelines that help in picking from a dizzying array of choices. The paper is concluded by discussing possible topics for future research.

Introduction

Many surveys and white papers have been written on blockchains [3, 48]. Why another? Previous surveys either compared the performance of blockchains against that of databases [49], alluded to potential new applications [50,76], considered their use in enterprises or just simply surveyed the related work [68]. In addition to providing an up-to-date literature survey, the material presented here goes beyond the known results in the following ways:

- We approach this survey through the lens of trust, drawing parallels between the distributed technology developments and the historical shifts in trust from a sociological standpoint. Approaching the taxonomy with this interdisciplinary perspective gives unique insights into how this technology might mature.

- Most descriptions of public blockchains only explain how they work [101, 109], but do not emphasize the reasons behind design choices. We focus on the latter, with the intent of demonstrating that those early architectural decisions have irreversible implications. This knowledge allows us to compare various choices available within this space and discuss the suitability of each for a given application.

- An equally important reason for this survey is to demystify the technology behind blockchains and to demonstrate that not all pieces of the blockchain puzzle are created equal. In particular, we show that consensus mechanisms play a vital role and the choice of which one is used can make or break a solution, and give guidelines that help navigate through the maze of many competing choices.

Trust

Never depend upon institutions or government to solve any problem. All social movements are founded by, guided by, motivated and seen through by the passion of individuals.

(Margaret Mead)

Societal Trust

It has been said that in recent times there is a “crisis of trust.” How did we get here? First let us define the term trust. For human society to function and operate smoothly, cooperation and interdependence among its members are two key prerequisites. Trust is at the core of these and other such prerequisites; no society can exist and run efficiently without it. Unfortunately, of late, trust in institutions such as banks, corporations, governments, religious organizations, and media has hit an all-time low. Recent scandals have only exacerbated the problem. The decision making authority that is concentrated within the hands of a privileged few is deemed unacceptable. This does not mean that we have become a trustless society. Quite the contrary - the rising popularity of sharing economy platforms, such as Airbnb and Uber, is clear evidence that people are willing to share their house with unknown guests for a fee or jump into a stranger’s car for a ride. The process of building trust and whom we deem trustworthy seems to have undergone dramatic changes.

According to Botsman [29, 32], trust used to be very local - extended only to people within the immediate circle, like friends and relatives. Then it gradually became centralized, with institutions as the key trust brokers. For example, money lending for the most part moved from the neighborhood lender to big banks which in turn rely on large, national credit bureaus. This centralized trust, as argued by Botsman, is giving way to distributed trust, and the advances in digital technology are the driving force behind this revolution.

Is this the end of trust in organizations? Not really. El-Erian [51] posits that credible, effective institutions are fundamental to society, in that they help shield countries from costly shocks resulting from economic, political or social volatility. Imagine a massive sell off of a cryptocurrency based on a rumor. In the absence of institutions, there would be no deposit insurance mandated by governments, the equivalent of the Federal Deposit Insurance Act of the US, to allay the fears of panicked sellers during a bank run. A contrarian viewpoint to the trustless model is also offered by Stinchcombe [99]. The future, therefore, might hold some combination of the two viewpoints: 1) For institutions, it is no longer business as usual and they would be compelled to embrace, whether willingly or not, emerging technologies and pass on the benefits of the resulting efficiencies to their clients, and 2) Trust would take some hybrid form, neither fully centralized nor distributed. Exactly how it is going to evolve and what form it takes is anyone’s guess at this point. The next section offers further evidence in support of this argument, why a more hybrid, decentralized form of trust is likely to evolve.

Subversive Technologies

Until a few decades ago, cryptography was primarily a military tool. Two developments helped bring it into the public domain: the invention of symmetric and asymmetric encryption (private-key and public-key cryptography). It was successfully used in the protection of human rights against oppressive regimes [63]. More recently, social media played an important role in the Arab Spring.

Chaum’s pioneering work to protect digital privacy dates back to the early 1980s [42]. He also introduced the notion of distributed untraceable transactions in [40,41] more than thirty years ago. A comprehensive historical perspective on commercial development of this technology that ultimately led up to the advent of current day cryptocurrencies can be found in [88]. The infamous file sharing system Napster, which came into existence in 1999, similarly challenged the entrenched power structure that existed in the content distribution aspect of the entertainment industry. While this entity has been legally shut down, file sharing has moved to mostly serverless platforms such as BitTorrent (trackers are still centralized and prone to censorship). BitTorrent and other file-sharing applications, during the peak of their popularity in 2008, consumed bandwidth similar to that of today's major content providers such as Netflix. According to one study [2], file-sharing accounted for almost 70% of all Internet traffic. Though one could argue that file-sharing applications could serve to distribute legitimate content, the overwhelming majority of the traffic, well over 90%, is illegal [43]. By 2015, the share of Internet traffic attributable to file-sharing had dropped to a mere 3% [21]. This decline in popularity could be attributed to the rise of legitimate platforms that make content available at an acceptable cost and to stricter copyright laws.

Approximately a decade after the birth of Napster, when file-sharing was riding high in popularity, bitcoin was invented [87]. It came at a time when there was utter disappointment with corruption in financial institutions and the governments that propped them up. Indeed, the genesis block of the bitcoin ledger contains the headline from The Times dated January 3rd, 2009 that refers to a second bailout for banks, expressing Nakamoto’s disillusionment with the status quo of the time.

The motivations behind the invention of peer-to-peer file sharing platforms and that of cryptocurrencies are similar - to disrupt the status quo. Given this backdrop, it is tempting to draw parallels between these two systems: both share clever, serverless implementations for the most part, both can be used for legitimate as well as illegitimate purposes, and both drew attention from academia, governments and regulatory agencies. The rise and fall of file-sharing platforms and the increasing dominance of legitimate, affordable content providers that are displacing them could serve as a harbinger of things to come for the financial sector. By yielding some control back to the consumer by adopting the emerging distributed technologies, traditional financial institutions could provide services that are more competitive and win back the confidence of their consumers.

What’s Next?

Some notable digital trends include distributed, community driven ranking systems, such as the reputation score earned on Q&A forums like StackOverflow or personal ratings on sharing-economy platforms like AirBnb and Uber. There are discussions around maintaining sovereignty over personal data in social networking sites after a serious data breach at Facebook. Surprisingly, China is going exactly in the opposite direction, much to the dismay of privacy advocates. It is considering developing a Social Credit System, where each citizen is assigned a score that not only includes that person’s history of paying bills on time but also daily activities, including shopping habits, exercise routines, social interactions, and the amount of time spent online [29].

Ledgers have undergone significant evolution starting with a paper-based double entry general ledger. During the early days of personal computers, software such as Quickbooks helped digitize the accounting process. With the advent of blockchains, the ledgers would most likely become distributed, in order to meet the increased customer expectations of auditability and transparency. Blockchain developments have captured the imagination of people from all walks of life. Ironically, significant investment to innovate in this space is coming from traditional institutions, e.g. academia, government and Big Tech, the very establishments that disruptors would like to overthrow.

It is natural to wonder, given all the benefits distributed, decentralized technology seems to offer, why it wasn’t the first choice when transitioning from mainframes to the networked world. Why the circuitous path via client-server computing? Simple: centralized solutions are easy to implement compared to their distributed counterparts. In fact, a good principle when designing a distributed solution is to first design and implement a centralized one, verify correct operation, and finally adapt it to the distributed model. The next section gives a more detailed explanation of these paradigm shifts in computing.

Parallels in the Digital Realm

In this section, we consider the local, centralized and distributed trust models and the corresponding computing paradigms with the goal of drawing out the parallels between the two.

Pre-Networked Era

Local trust corresponds to the pre-networked era of computing - the time of mainframes. These machines lacked connectivity to the outside world. The enterprise which operated the mainframe had to hire operators who sat at terminals and communicated with the enterprise’s clients by telephone. The enterprise trusted the computer operators it hired. Accounting in commerce remained manual up until the mid seventies. Trade with remote vendors began with mail order catalogs and the use of the telephone, and relied on trust between the vendor and customer. The merchant trusted the client to pay, and the client trusted the merchant to deliver the correct merchandise. If either party betrays this trust, there are institutions in place to punish this kind of behavior, e.g. the Better Business Bureau for customers to report their negative experience and credit-rating agencies to protect businesses from customers who defraud them. These institutions are still in active use today.

Trusted-Third Party (TTP)

The client-server paradigm resembles the trusted-third party (TTP) model. The advent of the World Wide Web and the age of networked computing introduced the notion of electronic commerce in the 1990s. Servers enabled customers to interact directly by giving them full access to the shopping catalog and allowing them to place orders, thus disintermediating the order-processing clerks. While doing so, they recognized and successfully implemented a key security and privacy requirement: customer isolation, i.e. insulating and concealing the activities of one user from another. This style of client-server computing roughly corresponds to the centralized trust model, in that clients fully trust the server. Even today, this model reigns supreme and the world’s richest individual at the time of this writing, by a very wide margin, is the founder of Amazon. Crash resistance and high availability are achieved by heavily provisioning the servers, and security by fortifying them against attacks. If a user places an order and disavows having ever placed the order after receiving the goods, the vendor can simply request that the goods be returned; if the customer does not comply, the vendor can write it off as the cost of doing business and sever the ties with that customer, report him to credit bureaus, the authorities, or any combination thereof. This amounts to a weak form of non-repudiation. In the following we show a real-life example of TTP and suggest that the third party need not always be trustworthy.

Example: Untrustworthy Third Party

Consider a real-estate transaction in the US where Alice wants to buy a house from Bob. The current practice that is common in most jurisdictions is for Alice to hire a buyer’s agent Barry, who is familiar with the local area where the property to be purchased is located, and for Bob to list the house using a seller’s agent Sam, who has a good sense of the market pulse. Generally speaking, the buyer, Alice in this case, cannot come up with the money required, so she seeks the help of a lender Larry. These three intermediaries Barry, Sam and Larry become stakeholders in the transaction, in that it is in their interest to make sure that the transaction executes to completion; otherwise, none of them get paid. There are other intermediaries such as inspectors that come into play, but for the sake of simplicity let us keep them out of our discussion. Given that no one trusts anyone in this kind of a transaction, they all agree to hire a closing agent Charlie, a TTP to facilitate the transaction. Typically it is the buyer’s prerogative to pick the closing agent. In reality, for practical reasons, the buyer delegates this responsibility to Barry, assuming that Barry would not betray her trust. This puts Barry in a position of power. Barry gets to pick Charlie and can even pick the inspector. It is important to note that Barry benefits most by quickly closing the deal, collecting his commission and moving on to the next buyer. Charlie and Larry would like to get repeat business from Barry, so they would not do anything to jeopardize the transaction. Bob and Sam, of course, would like to get rid of the house, so they also will not do anything to rock the boat. In other words, no one is really looking out for Alice. The system is stacked against a novice buyer who is not really protected from potential harm that could come from an unscrupulous buyer’s agent.
The real-estate industry is notorious for attracting people with questionable ethics and buyers unfortunately are taken advantage of routinely. Clearly, the trust model is broken here. Why is this practice still in use today? Because of its simplicity for a high-valued, complex transaction like a house purchase. There are two main, orthogonal drawbacks with TTP solutions:

- Drawback 1: Single-Point of Failure. The notion of a central authority facilitating client transactions, like the server in the client-server model, presents itself as an inadequate solution in fault-tolerant computing because it leads to a single point of failure: if the server fails, the whole system comes to a grinding halt. Even if a select set of privileged nodes collectively takes on the role of a server, which might eliminate the single point of failure, these nodes nevertheless become an attractive target for attackers.

- Drawback 2: Untrustworthy Server. In information security, it has long been recognized that TTP offers simple solutions that are secure, but they come at the cost of making a big leap of trust. As the above example illustrates, this may be misplaced trust. Blockchain enthusiasts rightfully reject the client-server (centralized) model on several grounds, chiefly taking issue with the concentration of power, citing that the TTP is vested with too much authority, imposes unreasonable fees to mediate, and introduces avoidable delays in settlement.

The next section describes how two different research communities sought to address the aforementioned drawbacks and arrived at distributed architectures that are superficially similar but differ in fundamental ways.

Leveling the Playing Field

The fault-tolerant computing community addressed Drawback 1 from Section 3.2 by replicating the application state across several servers, thus assuring high availability and guaranteeing progress as long as a majority of the servers are functional. The replication of state is achieved by following an algorithmic process, the so-called consensus protocol. The challenge is in maintaining the replicated state in the face of arbitrary failures. More details on consensus are given in Section 7. Two points about this approach are noteworthy. First, though all servers are equal peers, there is still a distinction between clients and servers, in contrast to the peer-to-peer computing model where each node runs client as well as server software. Second, to arrive at consensus, server nodes must trust each other to follow an algorithmic process.

Cryptocurrency proponents, on the other hand, arrived at a solution in dealing with Drawback 2 by eliminating trust altogether. In this, all nodes are equal - a true form of peer-to-peer computing. More importantly, because of the absence of trust, nodes cannot rely on each other to follow an algorithm. But, nevertheless, nodes must collectively agree on the state of the global data structures. This is achieved by rewarding nodes with incentives for correct behavior. This alternate method of achieving consensus can only give probabilistic guarantees. More details on this method are given in the next section.

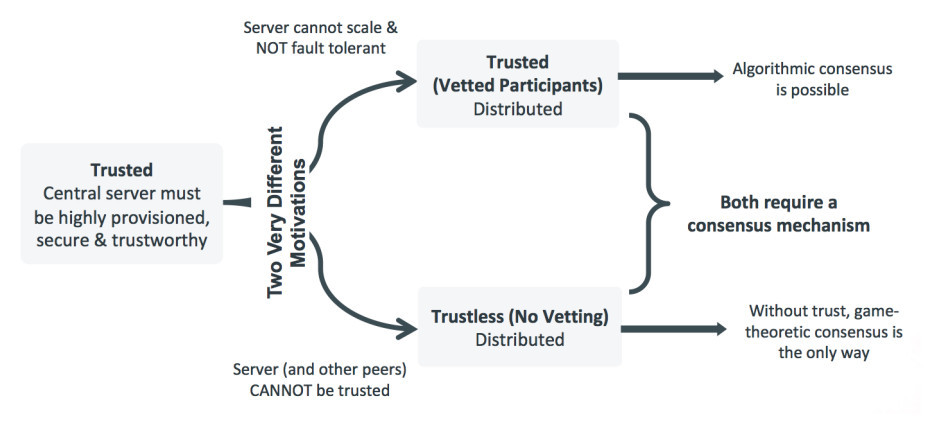

Two very different motivations of these two communities are depicted as a fork in the evolution of computing:

What is common to client-server and the two different paradigms of distributed computing? It is the notion of an intent log, a record of all clients’ intentions to modify the data store. Sufficient information about the operation that will be performed is written to the log so that it can be applied at any later time, even after a failure. This, sometimes in conjunction with other techniques such as shadow-paging [26], ensures recovery without data loss or corruption after a failure. Servers use consensus mechanisms to collaborate and keep this log consistent across all nodes. The intent log for the cryptocurrency world is the familiar blockchain, a single version of the truth that all nodes strive to keep identical. The intent log in the top fork of Figure 1 is the distributed ledger. The top and bottom forks have come to be known as the permissioned and public blockchains respectively.
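To make the idea of an intent log concrete, the following is a minimal sketch, assuming an in-memory log and a toy account store; the names `IntentLog`, `apply_op` and `replay` are hypothetical and not taken from any particular system. Each intention is recorded before the store is modified, so the store can be rebuilt from the log after a failure.

```python
import json


class IntentLog:
    """Append-only record of intended operations (hypothetical, in-memory)."""

    def __init__(self):
        self.entries = []

    def append(self, op):
        # Store a self-contained, serialized description of the operation
        # so that it can be re-applied at any later time.
        self.entries.append(json.dumps(op, sort_keys=True))


def apply_op(balances, op):
    """Apply one transfer to the data store; an overdraft becomes a no-op."""
    src, dst, amount = op["from"], op["to"], op["amount"]
    if balances.get(src, 0) >= amount:
        balances[src] = balances.get(src, 0) - amount
        balances[dst] = balances.get(dst, 0) + amount


def replay(log, initial):
    """Rebuild the data store from the initial state plus the log."""
    balances = dict(initial)
    for raw in log.entries:
        apply_op(balances, json.loads(raw))
    return balances


initial = {"alice": 5, "bob": 0}
log = IntentLog()
store = dict(initial)
for op in [{"from": "alice", "to": "bob", "amount": 2},
           {"from": "bob", "to": "alice", "amount": 1}]:
    log.append(op)       # record the intent first ...
    apply_op(store, op)  # ... then modify the data store

# Simulated recovery: the live store is lost, only the log survives.
recovered = replay(log, initial)
print(recovered == store)
```

A real system would write the log to durable storage before acknowledging the operation; that step is omitted here for brevity.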

Let us briefly consider how the double spend problem plays out in each of these three models. Consider a scenario where Alice’s account contains only one dollar, but she attempts to execute two transactions: one transferring her only dollar to Bob, and the other attempting to give the same dollar to Charlie.

In the centralized model, each transaction, as it arrives, is written to the log and then executed. When the second transaction from Alice is attempted, the system recognizes during the validation phase that her account balance is zero and ignores the transaction, rendering it a no-op.

In the permissioned model, exactly the same sequence of steps takes place because the consensus mechanism ensures that it delivers the two transactions in precisely the same order at all server nodes, first to Bob then to Charlie or the other way around. All servers process the first transaction by recording the intent followed by the successful execution of that transaction. They then update the local ledger accordingly. As with the centralized model, when the second transaction from Alice is encountered, after consulting the local copy of their ledger each node independently realizes that there is no remaining balance in her account and would treat it as a no-op.
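The behaviour described for the centralized and permissioned models can be sketched as follows. This is an illustration under simplifying assumptions: the consensus protocol itself is not modelled and is represented only by the agreed order `agreed_order`, and the function name `apply_in_order` is hypothetical.

```python
def apply_in_order(ordered_txs, initial_balances):
    """Validate and execute transactions in the given order on a local ledger."""
    balances = dict(initial_balances)
    outcomes = []
    for sender, receiver, amount in ordered_txs:
        if balances.get(sender, 0) >= amount:
            balances[sender] -= amount
            balances[receiver] = balances.get(receiver, 0) + amount
            outcomes.append("applied")
        else:
            # Insufficient balance: the transaction is treated as a no-op.
            outcomes.append("no-op")
    return balances, outcomes


initial = {"alice": 1, "bob": 0, "charlie": 0}

# Assumed output of the consensus layer: every server sees the same order.
agreed_order = [("alice", "bob", 1), ("alice", "charlie", 1)]

# Three server nodes, each processing the agreed order independently.
replicas = [apply_in_order(agreed_order, initial) for _ in range(3)]
print(replicas[0])

# Had consensus delivered the opposite order, Charlie would get the dollar,
# but all replicas would still agree with one another.
reversed_result = apply_in_order(list(reversed(agreed_order)), initial)
print(reversed_result)
```

With a single replica this is the centralized case; with several, identical ordering plus deterministic validation is what keeps the local ledgers identical.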

For public blockchains, the validation process is a bit more complicated. When Alice attempts to spend the same dollar two (or more) times, the validators (i.e. miners) would consider each of her transactions along with other transactions that make up a block and attempt to validate all transactions in that block. It is conceivable that validators would see Alice’s dollar as unspent when they go to check the existing ledger, but once one of them adds a block containing a transaction from Alice, no one else can add a block containing a conflicting transaction from Alice to that fork of the blockchain. It is true that two blocks containing two different transactions from Alice could get added to two separate forks, but the cryptocurrency protocols make sure that as time progresses only one branch survives as the blockchain evolves. A multitude of problems can arise with this model of arriving at consensus to maintain the blockchain ledger. The next section examines some of the fundamental limitations of this approach.

Destination Disintermediation

Consider the difficulties inherent in operating in a totally trustless environment. Let us take cryptocurrencies as an example. Many papers have been written explaining how some popular cryptocurrencies, e.g. Bitcoin, work. For example, see [88]. Instead of reproducing the inner workings of these platforms, let us look at some key mechanisms that are at play, the rationale for their inclusion, and why they are inextricably woven into the platform design. In our discussion, public, permissionless and trustless are used interchangeably and refer to the same paradigm.

The Anathema of Proof of Work

Since the very premise of cryptocurrencies is elimination of all trust, the design of the underlying network must provision for zero trust between every pair of peers. Therefore, it cannot function within the realm of the client-server paradigm, because the server cannot be trusted by any of the clients. As mentioned before, a move to the distributed model is forced by the zero-trust requirement. Stated differently, the distributed network backing a cryptocurrency must allow any user onto the network, has no idea how many users there are at a given time, and must assume that they behave in an unpredictable, possibly malicious, manner; this is the Byzantine fault tolerance (BFT) model. It is intuitively obvious that unless a majority of nodes are honest, nothing useful can be done on this network. We will formalize this notion shortly and in fact show that even if honest nodes are present in a majority, that is not enough to prevent bad things from happening. Since we are limiting our discussion to currencies, clearly a ledger of transactions must be maintained. In a distributed setting, this ledger is the “globally accepted truth” that states the ownership of currencies at a given point in time and the transactions that have taken place thus far that led the ledger to the current state.

With this as the backdrop, let us explore the following questions:

- Why can’t public blockchains use the well-known Byzantine consensus algorithms for distributed ledger maintenance? These require a super-majority of honest nodes. Unfortunately, they will not work even with one malicious participant, because that one node can create several fake identities and thereby reduce the honest nodes to a minority - the Sybil attack. Since public blockchains, like Bitcoin, by design do not want to know the identities of their participants for privacy reasons, the algorithmic approach is unsuitable.
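The effect of a Sybil attack on the super-majority requirement can be illustrated with a little arithmetic. The sketch below assumes the classical bound for Byzantine agreement, namely that n nodes tolerate f faulty ones only if n ≥ 3f + 1; the function names are hypothetical. It counts how many fake identities a single attacker must register before that bound is violated.

```python
def bft_tolerates(total_nodes, faulty_nodes):
    """Classical Byzantine bound: safe only if total >= 3 * faulty + 1."""
    return total_nodes >= 3 * faulty_nodes + 1


def sybils_needed(honest_nodes):
    """Smallest number of attacker-controlled identities that breaks the bound."""
    fake = 1
    while bft_tolerates(honest_nodes + fake, fake):
        fake += 1
    return fake


# With 7 honest nodes, one attacker identity is harmless (8 >= 4) ...
print(bft_tolerates(7 + 1, 1))
# ... but identities are free in an open network, so the attacker simply
# registers more of them until the bound no longer holds.
print(sybils_needed(7))
print(sybils_needed(100))
```

Because creating an identity costs nothing in an open network, the number returned is no real obstacle, which is why identity-based voting does not carry over to the public setting.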

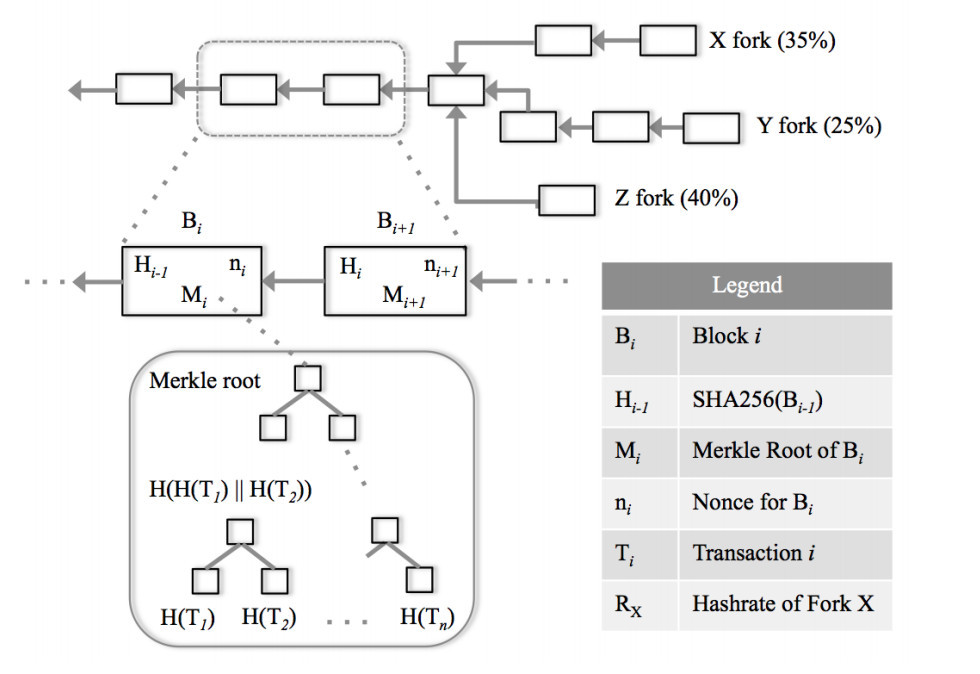

Why do public blockchains waste so much energy in maintaining the ledger? In one word, it is for immutability. In a public, distributed setting achieving immutability is a tall order. In computer science terms, the only operation that can be performed on a ledger is the Append operation. This naturally leads to the definition of blockchain. In a blockchain, every block includes a hash of the previous block, with the exception of the very first block - the genesis block B0, resulting in a chain of blocks. Blockchain is reminiscent of a linked list data structure with the current block at the head and the genesis block as the tail. For an excellent visual demo, see [35].

So, if a bad actor, Alice, decides to “rewrite the transaction history” and tamper with a block, then all she would have to do is alter that block, compute the new hash, cascade this change to the remaining blocks all the way to the current block, and broadcast this new tampered ledger. It does not take much effort for Alice to mount this attack, because computing a hash does not require much computational work. While Alice is carrying out this attack, Eve could similarly tamper with the log to suit her needs and broadcast it to the network at the same time. An honest miner witnessing multiple plausible ledgers would have no way of knowing the correct way to extend the ledger. Therefore, without further safeguards this preliminary version of blockchain design is worthless.
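The weakness of this preliminary design can be demonstrated with a minimal sketch of a hash-linked ledger without any proof of work. The block layout and the helper names (`block_hash`, `build_chain`, `is_consistent`) are assumptions made for illustration only. Altering a single block in isolation is detected, but recomputing the hashes of all later blocks yields a forged ledger that is just as self-consistent as the honest one.

```python
import hashlib

GENESIS_PREV = "0" * 64  # placeholder predecessor for the genesis block


def block_hash(prev_hash, payload):
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def build_chain(payloads):
    """Link blocks so that each one carries the hash of its predecessor."""
    chain, prev = [], GENESIS_PREV
    for payload in payloads:
        h = block_hash(prev, payload)
        chain.append({"prev": prev, "payload": payload, "hash": h})
        prev = h
    return chain


def is_consistent(chain):
    """Check every stored hash and every link to the previous block."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_hash(block["prev"], block["payload"]):
            return False
        prev = block["hash"]
    return True


honest = build_chain(["genesis", "alice pays bob 1", "bob pays carol 1"])

# Tampering with one block and nothing else is detected ...
naive = [dict(b) for b in honest]
naive[1]["payload"] = "alice pays eve 1"

# ... but cascading the change through all later blocks is cheap and
# produces a ledger that passes the same consistency check.
forged = build_chain(["genesis", "alice pays eve 1", "bob pays carol 1"])

print(is_consistent(honest), is_consistent(naive), is_consistent(forged))
```

An observer who is handed both `honest` and `forged` cannot tell from the data alone which one is legitimate.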

This is reminiscent of a problem that is familiar to everyone: spam. If there is no price to send an email, anyone can send an email to anyone, any number of times, flooding inboxes with unwanted email. On the other hand, if the sender is forced to pay a small price analogous to a postage stamp, say in CPU cycles, before she is allowed to send an email, it would make the job of email spammers very hard, without unduly burdening a legitimate sender. All public blockchains implement a throttling mechanism to prevent “ledger spam.” In a nutshell, this is the rationale for requiring Proof of Work when attempting to add a block to the chain. Proof of Stake is another well known throttling mechanism.

Bitcoin’s answer to realizing a tamper-proof ledger is to require the inclusion of a random string (known as a number used once, abbreviated nonce) in each block, so that the new block to be added to the ledger, when hashed together with a proper nonce, produces a binary string that has a specified number of leading zeros. The onus of finding the nonce is on the network participant who would like to append a block to the ledger, a miner. Finding a nonce satisfying the leading-zero property, by necessity, requires a brute-force search, known as the mining process. Miners are rewarded for their effort; in fact, the very first transaction of every block is the incentive given out to the miner. To get an idea of the effort that needs to be expended, with SHA256 as the hash algorithm and the system requiring k leading zeros, where k = 40, a miner would have to try on average about one trillion (2^40) nonces before finding a suitable one. Currently k = 18. To maintain a constant transaction rate, one must progressively increase k to offset the continuously improving computing speeds. Once a solution is found, the new block B is broadcast to the network. Other miners, upon receipt of B, first verify that the transactions and the proof of work included in B are both legitimate. Other miners tacitly express their acceptance of B by working on extending the fork to which B was added. As can be seen from this description, the ledger could evolve along multiple paths. As per the bitcoin protocol, the surviving branch is the longest one, i.e. the one with the most blocks. With this safety measure, to alter a block’s content, a new nonce for that block and for all subsequent blocks has to be recomputed. It should be clear from this discussion that a block closer to the genesis block is less likely to be forked and “sidelined” than one that was added recently.
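The brute-force search for a nonce can be sketched in a few lines. This is a simplified illustration rather than Bitcoin's actual procedure: it hashes a plain string once with SHA-256 (Bitcoin hashes a binary block header twice and compares the result against a target), it uses a small difficulty k = 12 so that it finishes quickly, and the function names are hypothetical.

```python
import hashlib


def leading_zero_bits(digest):
    """Count the leading zero bits of a byte string."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
        else:
            bits += 8 - byte.bit_length()
            break
    return bits


def block_digest(prev_hash, payload, nonce):
    return hashlib.sha256(f"{prev_hash}|{payload}|{nonce}".encode()).digest()


def mine(prev_hash, payload, k):
    """Try nonces 0, 1, 2, ... until the hash has at least k leading zero bits."""
    nonce = 0
    while leading_zero_bits(block_digest(prev_hash, payload, nonce)) < k:
        nonce += 1
    return nonce, block_digest(prev_hash, payload, nonce).hex()


def verify(prev_hash, payload, nonce, k):
    """Verification needs a single hash, regardless of how long mining took."""
    return leading_zero_bits(block_digest(prev_hash, payload, nonce)) >= k


K = 12  # toy difficulty: about 2**12 = 4096 attempts on average
prev = "00" * 32
nonce, digest_hex = mine(prev, "alice pays bob 1", K)
print(nonce, digest_hex)
print(verify(prev, "alice pays bob 1", nonce, K))
```

Each additional required zero bit doubles the expected number of attempts, which is where the figure of roughly 2^40 ≈ 1.1 × 10^12 attempts for k = 40 comes from. Finding the nonce is expensive while checking it is cheap, and this asymmetry is what makes rewriting a long suffix of the chain costly.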

A malicious user in a bitcoin network can try to defraud people by entering into a race with other miners, creating a fork with an alternate ledger and potentially winning the race, but at a tremendous cost in CPU cycles. On the other hand, the same work directed towards honest mining can result in possibly bigger profits, by way of incentives. Hence trying to subvert the system is generally not in one’s interest. The incentive mechanisms that are put in place therefore rely on game-theoretic principles for the correct operation of Bitcoin and assume that all miners behave in a way that is profitable to them. This is called the Nakamoto Consensus. As a corollary one can conclude that the Bitcoin ledger is a probabilistic ledger, i.e. as more and more blocks are added after a given block, it gets progressively harder to purge that block from the ledger. Blocks closer to the first (genesis) block have a higher probability $P$ of being a “permanent” part of the ledger than the ones that were added most recently: $P(B_i) > P(B_{i+1})$ for all $i \geq 0$. Hence it is worth noting that the consensus (and the state of the ledger) achieved by this means is probabilistic.
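The probabilistic nature of the ledger can be illustrated numerically. The sketch below uses the simplified gambler's-ruin estimate from the original Bitcoin white paper: an attacker controlling a fraction q of the mining power who is z blocks behind eventually catches up with probability (q/p)^z, where p = 1 - q, and with probability 1 if q ≥ p. The function name is hypothetical, and the model ignores network delays and other practical effects.

```python
def catch_up_probability(q, z):
    """Chance that an attacker with hash-power share q erases a deficit of z blocks."""
    p = 1.0 - q
    if q >= p:
        return 1.0
    return (q / p) ** z


# A block buried under more successors is harder to displace.
for depth in (1, 2, 6, 12):
    print(depth, catch_up_probability(0.10, depth))
```

Under this model the probability of purging a block shrinks geometrically with the number of blocks built on top of it, which is consistent with older blocks being more “permanent” than recent ones.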

One immediate negative consequence of the proof of work mechanism is the dampening effect on throughput, measured in transactions per second (tps): Bitcoin currently achieves about 7 tps. Unless there are some radically new designs, it is not clear how public blockchains are going to disrupt the entrenched power structure and challenge the likes of Visa or Mastercard, whose throughput is several orders of magnitude higher. Furthermore, some recent credible published sources have concluded that the annual electricity consumption of bitcoin mining is comparable to that of Ireland (∼24 TWh), and is projected to reach the annual consumption of the Czech Republic within a year [55]. This is simply not sustainable. The bad news for bitcoin does not end here; it is far from achieving the ideal of a totally trustless environment, as the next subsection shows.

In Miners We Trust

Let us now consider the role played by miners in public blockchains. First a thought experiment: imagine a blockchain with a single miner M. Suppose M buys something from Alice and writes this transaction T to the ledger. Alice inspects the ledger, waits until a few more blocks are added after the block containing T, and releases the goods to M. Later, M could use the same coin to buy something else from Bob by writing a different block with this new transaction to the ledger and inserting it before the block that contains T, thus forking and sidelining the branch that has the block containing T. Since there are no other miners competing to extend the original branch, M can create this fork after receiving the goods from Alice. This is called the double-spend attack and it stems from the fact that M has too much control over the mining process.

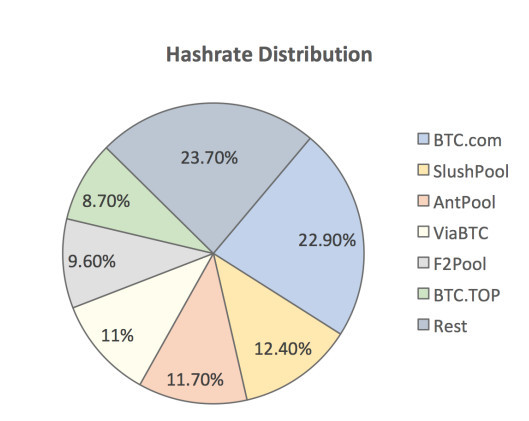

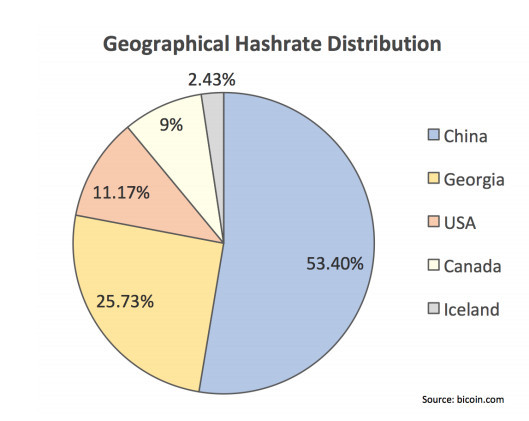

Though the previous discussion is not a proof, it should convince the reader that the more mining power a miner (or group of miners) controls, the more influence he has in deciding which fork of the ledger grows faster. Having majority control ensures a higher mathematical probability that the favored fork becomes the longest branch. Note that while the intent of bitcoin is to keep the currency distributed, currently if any 5 of the top 6 miners decide to collude, they would control over 51% of the mining power, which would put them in a position to selectively exclude blocks, or fork the network and mount a double-spend attack. See Figure 3. What is ironic is that this advantage can be had even with only 40%, as long as the remaining 60% is split in a way that no one else has 40% control. In other words, the so-called 51% attack is a misnomer; it can potentially be carried out with even less than 51%. Stated differently, if the adversary controls 51%, then he is guaranteed to have an advantage, i.e. it is a sufficient condition but it is not necessary. Figure 2 shows three forks X, Y and Z, and their respective hashrates RX, RY, and RZ that are being expended along those forks. Assume all miners are working on one of these three forks, i.e. RX + RY + RZ = 100%. For any set of values such that RZ > max(RX, RY), the Z fork, though not the longest fork at the moment, could eventually prevail. (For example, take RX = 30%, RY = 30%, and RZ = 40%.)
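The three-fork argument can be checked with a small Monte Carlo sketch. It assumes that each new block lands on a fork with probability equal to that fork's hashrate share and that miners never switch forks; both are simplifications. The starting lengths, trial counts and function names are hypothetical.

```python
import random


def simulate_fork_race(start_lengths, hashrates, blocks, rng):
    """Grow competing forks block by block and return the name of the longest one."""
    lengths = dict(start_lengths)
    forks = list(hashrates)
    weights = [hashrates[name] for name in forks]
    for _ in range(blocks):
        # The next block extends a fork with probability equal to its hashrate share.
        winner = rng.choices(forks, weights=weights, k=1)[0]
        lengths[winner] += 1
    return max(lengths, key=lengths.get)


def z_win_rate(trials=200, blocks=2000, seed=7):
    """Fraction of simulated races in which fork Z ends up the longest."""
    rng = random.Random(seed)
    start = {"X": 12, "Y": 11, "Z": 10}        # Z starts as the shortest fork
    rates = {"X": 0.30, "Y": 0.30, "Z": 0.40}  # example values from the text
    wins = sum(simulate_fork_race(start, rates, blocks, rng) == "Z" for _ in range(trials))
    return wins / trials
```

With a 40% share against two 30% shares, Z gains on each rival by about 0.1 block per network block on average, so its initial deficit is erased quickly and it wins almost every simulated race.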

Mining power in the hands of only a few was thought of only as a theoretical threat until recently, but has proved to be very real: in May 2018, Bitcoin Gold exchange suffered from such an attack and lost over 18 million dollars [93]. This article notes “...But the rise of massive mining conglomerates that deploy specialized computer equipment has seen a growing centralization of mining in recent years.” Two points are noteworthy: 1) there is nothing magical about 51%; in fact, such an attack can be carried out with a lesser percentage, but the probability of success would be smaller, and 2) on the other hand, it would be apparent to other users that the network is being attacked, so they could take corrective action. A detailed discussion on the security risks posed by mining and other research directions surrounding Bitcoin can be found in [28,54].

No discussion on public blockchains is complete without talking about the second most dominant cryptocurrency, Ethereum, and the associated smart-contract ecosystem. Bitcoin can be thought of as a pure digital currency whose transactions are direct, whereas Ether, the currency of Ethereum, changes hands when previously agreed upon conditions are met. Though smart contracts were popularized by Ethereum, they were conceived way back in 1996 by Nick Szabo [100].

A simple example of a smart contract is the implementation of a sports bet, similar to an office pool, say on an event like FIFA World Cup, without the use of a trusted-third party. This program would have logic to accept bets from different individuals, wait until the championship is over and distribute the pool to the participants who guessed the winner correctly. Several business rules could be incorporated into the contract. For example, in the event that no one guesses the correct outcome, bets could be returned to the participants or awarded to those who correctly guessed the runner up.
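The payout rules of such a betting pool can be sketched in a few lines. This is plain Python rather than contract code, and it assumes one particular combination of the rules mentioned above: pay those who picked the champion, otherwise those who picked the runner-up, otherwise refund everyone. The function name, the stake-proportional split and the sample data are hypothetical.

```python
from fractions import Fraction


def settle_pool(bets, champion, runner_up):
    """bets: list of (participant, pick, stake). Returns participant -> payout."""
    pool = sum(stake for _, _, stake in bets)
    # Try the champion first, then fall back to the runner-up (assumed rule order).
    for outcome in (champion, runner_up):
        winners = [(who, stake) for who, pick, stake in bets if pick == outcome]
        if winners:
            total = sum(stake for _, stake in winners)
            payouts = {}
            for who, stake in winners:
                # Split the whole pool in proportion to each winner's stake.
                payouts[who] = payouts.get(who, 0) + Fraction(pool * stake, total)
            return payouts
    # Nobody guessed either outcome: return every stake to its owner.
    refunds = {}
    for who, _, stake in bets:
        refunds[who] = refunds.get(who, 0) + stake
    return refunds
```

Exact fractions are used so that the payouts always add up to the pool; an on-chain version would instead work in the currency's smallest integer unit.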

Ethereum uses Solidity, a Turing-complete language, to write smart contracts. In comparison Bitcoin uses a fairly limited opcode based scripting language. An excerpt of a smart contract built around a coin toss written in Solidity is included in Appendix A.

Current throughput of Ethereum is not that different from that of Bitcoin. The Ethereum community considered trading proof-of-work mining in favor of proof of stake (PoS), where the network is secured by the owners of tokens, but decided that a pure PoS solution brings its own set of problems. Second generation Ethereum is trying to address the following four current limitations [95,107]:

- Mining: Moving away from pure PoW to a hybrid scheme that combines PoS, in a new protocol called Casper,

- Privacy: Tightening privacy measures by limiting the visibility of the ledger,

- Scale: Achieving higher scale through sharding (dividing a blockchain network into several smaller component networks capable of processing transactions in parallel) and by creating child blockchain networks within the main blockchains to process information more swiftly, dubbed Plasma, similar to Bitcoin Lightning Network, and

- Smart contract security: Note that any time a computer needs to run a program whose origin cannot be trusted, there is a concern. Different approaches have been proposed to deal with this concern. The second generation is exploring the use of a Python-like smart-contract programming language, “Viper”, with the goal of enabling the development of safer Ethereum applications.

With these changes implemented, the Ethereum founder believes that it is on its way to millions of transactions per second. Blockchains seem to have found application in the financial technology (fintech) sector [8, 9]. Ethereum, with its smart-contract ecosystem, is considered to be well suited to this domain. The Ethereum specification for enterprise deployment does not prescribe any particular consensus mechanism and leaves it up to the implementer. It has been realized with different consensus methods including Raft [92], an implementation of the Castro-Liskov method [38] known as Istanbul, and Proof of Authority (PoA) consensus [108]. In PoA, nodes take turns proposing. It should be noted that distributed consensus, as pointed out in Section 7.1, is too complicated to lend itself to a simple round-robin scheme. Two technologies that compete with Ethereum in the permissioned space are R3 Corda [34] and IBM Hyperledger Fabric [64]. See also [103].

Digital Meets Physical

Some trust is just unavoidable. Consider the problem of tracking newborns in a hospital ward [102]: a baby should not be mistaken for another at any cost. Given the tamper-proof, auditable nature of blockchains, it is tempting to think that they are ideally suited for this problem. We contend that “bootstrapping” the blockchain is not easy without some trust in the people involved. Let us break down the problem from the beginning. To create a record that unmistakably identifies a baby, an immutable characteristic such as the digital hash of the baby’s DNA should get recorded on the birth certificate. In addition, other pertinent information such as the time of birth and the parents’ names could be included as part of this digital birth certificate.

Let us examine this one level deeper. What is the process to create this digital fingerprint? Some biological information from the baby must be extracted, sent to a lab, and a privacy-preserving digital representation of this data should be created. The chain-of-custody involved in the creation of this record leaves no choice but to trust the people involved in this process. The authors term this “The Last Mile Problem” of blockchains. In other words, as Stinchcombe [99] correctly observed:

It’s true that tampering with data stored on a blockchain is hard, but it’s false that blockchain is a good way to create data that has integrity.

Now consider another problem that plagues most cryptocurrencies: centralized exchanges that facilitate currency transfers, between crypto and fiat currencies. These are an attractive target for attackers and a constant source of problems. This could also be the place where privacy breaches happen. Indeed, most of the attacks on cryptocurrencies we hear in the news take place exactly at this boundary [75, 86]. It has long been known that illegal activities happen at exchanges [61], spurring new regulations from governments for exchanges to monitor money-laundering and other illegal activities. Ethereum co-founder Vitalik speaking to the necessity of centralized exchanges bemoans [12]:

“I definitely personally hope centralized exchanges burn in hell as much as possible. In practice, particularly on the fiat to crypto side, it is very difficult to decentralize because you ultimately are interfacing with the fiat world, and the fiat world is one that only has basically centralized gateways. . . There are valuable services being provided there that are very hard to decentralize.”

Vitalik’s disdain for centralized exchanges not only stems from the fact they wield so much power in deciding which currency gets listed, but also their monopoly power in dictating the listing fee. What are the alternatives to centralized exchanges? Trading cryptos for other cryptos could be done using distributed exchange (DEX) solutions. But, they have their own host of problems [10]. With no central coordinator, the onus of making trades and transferring money is completely shifted to the user. DEXs are not regulated or insured. This level of disintermediation clearly does away with any kind of customer support; the users are on their own if there is a problem. So the holy grail of total disintermediation may after all be unattainable.

But even if it can be realized partially, which would reduce the cost and time for settlement, it is still a win. To summarize this section, operating networks in a trustless environment is challenging. In particular, one has to contend with:

- The payment mechanisms needed to modify the ledger, e.g. PoW, and its throttling effect on the transaction rate to thwart Sybil attacks,

- Trusting miners to do the right thing,

- Coping with eclipse attacks [62] in the event of network partitions,

- The last-mile problem,

- The onus of guarding the valuables is placed on the user, i.e. the custody problem [91] and

- The lack of customer support

These are significant limitations. In the next section, we will see how to overcome these obstacles by relaxing the zero-trust requirement, yet keeping the most attractive part of public blockchains - a distributed, immutable ledger. This is the world of permissioned blockchains. Before we do that, one unintended consequence of immutability is worth mentioning - once content makes it to the blockchain, purging it is practically impossible, as hard as tampering with the legitimate history. In other words, there is no Undo button. Why would anyone want to edit the ledger, after the fact? For a couple of reasons: through human error, bad data might make it to the ledger, or an attacker inserts vile content. The current bitcoin ledger contains objectionable, in some instances illegal, content [81] in the optional fields of the block, raising the spectre of illegitimacy by mere possession for mining purposes. There may be a solution in the permissioned world, but who is authorized to edit the ledger, and how it is done would obviously vary depending on the situation.

It’s Easier To Ask Permission Than It Is To Get Forgiveness (Rear Admiral Grace Murray Hopper)

We flip the quote by Hopper and demonstrate that it is better to get permission first, rather than to allow anyone onto the network and face the consequences. By restricting access to participants who have been vetted, it becomes easy to build exciting applications based on blockchains, in this permissioned setting. Given that blockchain innovation came from cryptocurrencies and challenged the financial industry’s current practices, the banking sector first considered it for adoption. Here are three oft-mentioned use cases from this sector:

- A government bond which, if written and maintained with smart contracts, will disburse the maturity amount to the bearer upon maturity,

- Shorter settlement cycles for corporate clients from syndicated (multiple lenders) loans. Estimated savings are between USD $2 billion and $7 billion annually [8], and

- Better efficiencies in claims handling in personal motor insurance industry by automation and reduced processing overheads. Estimated savings are USD $21 billion [8].

The successful startup company Everledger [4] is in the business of guaranteeing the provenance (the place of origin and ownership history, commonly used as a guide to the authenticity or quality) of diamonds. There are three important characteristics that are unique to this use case. First, it is a highly-valued asset, like a parcel of land, that appreciates over time. Second, due to the high stakes involved, this business attracts fraudsters, who inject fake diamonds into the supply-chain. Finally, there are multiple intermediaries and stakeholders with different motivations from the time diamonds are mined to the time they belong to a piece of jewelry worn by someone. Some example intermediaries are government authorities needing to collect export/import taxes, owners of the diamond mines, jewelry makers, jewelry buyers etc. The initial record contains a digital fingerprint made from several metadata points, the laser inscription on the girdle, color, clarity, cut, and carat weight of the diamond. Certification regarding the regulation around conflict diamonds could be made part of this record. Once this is available, the ownership as it changes hands can be maintained in a distributed ledger, and made available to participants who are privy to this information. The auditability and accountability needed to protect the supply-chain makes this a perfect use case for the permissioned blockchains [65]. But, Stinchcombe’s [99] warning about the difficulty of getting correct data onto the supply-chain must be borne in mind with this application.
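A digital fingerprint of this kind can be sketched as a cryptographic hash over a canonical encoding of the recorded attributes. The example below is an assumption for illustration and does not describe Everledger's actual scheme; the field names and values are hypothetical.

```python
import hashlib
import json


def diamond_fingerprint(attributes: dict) -> str:
    """Return a SHA-256 hex digest over a canonical encoding of the attributes."""
    # Sorting the keys makes the digest independent of the order in which fields were entered.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    stone = {
        "inscription": "HYPOTHETICAL-0001",  # laser inscription on the girdle
        "color": "D",
        "clarity": "VS1",
        "cut": "Excellent",
        "carat": 1.02,
    }
    print(diamond_fingerprint(stone))
```

Any change to a recorded attribute yields a different digest, which is what makes later tampering detectable. The hash cannot, however, vouch for the accuracy of the attributes as first entered.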

Finally, let us consider the land registration process. After seeing people in Haiti fight over land ownership when their paper ledger got destroyed in a hurricane, one finds a blockchain solution very attractive. It can also facilitate the identification of assets seized on tax liens, abandoned properties, or those without ‘good titles’, i.e. those that are likely targets for fraud. Let us see how the double-spend problem manifests itself in this context, and how the blockchain solution prevents the owner from selling the land to more than one person. The potential buyer could register a smart contract with the local registrar to release the funds from his bank once the ownership has been transferred. The registrar, after verifying the existence of funds in escrow, attempts to add this transaction to the blockchain. There can be two outcomes at this point. First, if the seller is the rightful owner of the land at the time of addition, everything goes as expected: the buyer gets the land and the seller receives the money. On the other hand, if the seller was never an owner of that land or is no longer an owner at the time, the smart contract voids the sale and informs the escrow to return the money to the buyer. The question is whether the registrar or the seller can purge the block containing the previous sale and defraud this buyer. That may be possible in one copy of the ledger, but if the ledger is replicated across different servers, then it is practically impossible to change all the copies.
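The two outcomes described above can be sketched as a single ownership check. This is a minimal single-node illustration: replication of the ledger, which is what actually protects the history, is not modeled, and the class name, method name and return values are hypothetical.

```python
class LandRegistry:
    """Toy registry that refuses to record a sale by anyone but the current owner."""

    def __init__(self, owners):
        self.owner_of = dict(owners)  # parcel id -> current owner
        self.ledger = []              # append-only list of recorded transfers

    def transfer(self, parcel, seller, buyer, escrow_amount):
        """Record the sale if the seller owns the parcel; otherwise void it."""
        if self.owner_of.get(parcel) != seller:
            # Seller never owned the parcel or has already sold it: refund the buyer.
            return ("voided", buyer, escrow_amount)
        self.owner_of[parcel] = buyer
        self.ledger.append((parcel, seller, buyer, escrow_amount))
        # Ownership has moved, so the escrowed funds are released to the seller.
        return ("settled", seller, escrow_amount)
```

A second attempt by the same seller to sell the same parcel fails the ownership check, which is the land-registry analogue of rejecting a double spend.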

Finally blockchain applications in the permissioned setting have also been found in copyright protection [14]. The next section walks through a detailed case study of building a gold exchange prototype in the permissioned model.

Consensus is the Key (Rest is Mostly Window Dressing)

In this section, we describe a simplified gold exchange, AuX. The primary motivation for its inclusion here is to illustrate the ease with which highly available, secure, fast applications can be built provided a distributed consensus engine (DConE) is available.

First let us start with the requirements for such an exchange. The trading platform, AuX, should be capable of accepting trades, either asks or bids, prioritizing them primarily on the competitiveness of the price and secondarily on the time of submission when multiple trades with the same price are encountered. Each customer must be identifiable by a unique ID.

It is easy to encode business rules such as preventing buying and selling to oneself, or trades that exceed the current account balance. Another rule may be that when a bid at price Xb is received that is higher than the current best ask price Xa, it is fulfilled immediately, much like a market order, but at price (Xa + Xb)/2, favorably beating the expectations of both the buyer and the seller. All transactions could be levied a small transaction fee that is intended to go to the platform provider as an incentive for providing the service. One could model an algorithmic trader to take the role of a market maker to provide liquidity.

In particular, we will evaluate the following three properties of different solutions:

- Privacy: Traders’ identity and their activity should be private

- Non-Repudiation: Once a trade is placed, it cannot be disowned by the trader who placed it

- Confidentiality: All communication between the client and the platform, and within the platform must remain encrypted

Trades can be fulfilled as shown below:

Algorithm Settle Ask(p, q) (Bid(p, q) is similar)

Require: Trade T from client C: Sell q units of gold at price p per unit

- Idempotence: Check if T was already processed. If yes, return.

- Business Logic: Apply common-sense rules, e.g. make sure that C’s account currently has q units of gold and that C does not have an outstanding Bid with price more than p. If T fails these tests, return.

- Fulfill:

- (a) Insert T into Ask Min Heap Amin

- (b) If the best Ask price pask, as indicated by the root node of Amin, is ≤ the best Bid price pbid, as indicated by the root node of Bid Max Heap Bmax, then

- i. delete the root nodes of both Amin and Bmax, and

- ii. write this pair of trades to the ledger along with the settlement price as (pask + pbid)/2

- return
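The Settle Ask steps above can be sketched with Python's `heapq` module. This is a minimal single-node illustration under several assumptions that are not part of the paper: trades are matched whole (no partial fills), account balances are not updated after a match, and the mirror rule used for bids is a guess at what “Bid(p, q) is similar” implies. The class and method names are hypothetical.

```python
import heapq
import itertools


class AuX:
    """Toy order book following the Settle Ask steps; no partial fills (an assumption)."""

    def __init__(self, gold_balances):
        self.gold = dict(gold_balances)  # client -> units of gold held
        self.asks = []                   # min-heap of (price, seq, trade_id, client, qty)
        self.bids = []                   # max-heap emulated with (-price, seq, ...)
        self.processed = set()           # trade ids already seen
        self.ledger = []                 # (ask_id, bid_id, settlement_price)
        self._seq = itertools.count()    # arrival order breaks price ties

    def settle_ask(self, trade_id, client, price, qty):
        # Idempotence: ignore a trade that was already processed.
        if trade_id in self.processed:
            return False
        self.processed.add(trade_id)
        # Business logic: enough gold, and no outstanding bid of one's own above the ask price.
        if self.gold.get(client, 0) < qty:
            return False
        if any(c == client and -neg > price for neg, _, _, c, _ in self.bids):
            return False
        # Fulfill (a): insert into the Ask min-heap, then (b): try to match.
        heapq.heappush(self.asks, (price, next(self._seq), trade_id, client, qty))
        self._match()
        return True

    def settle_bid(self, trade_id, client, price, qty):
        if trade_id in self.processed:
            return False
        self.processed.add(trade_id)
        # Mirror rule (assumed): no outstanding ask of one's own below the bid price.
        if any(c == client and p < price for p, _, _, c, _ in self.asks):
            return False
        heapq.heappush(self.bids, (-price, next(self._seq), trade_id, client, qty))
        self._match()
        return True

    def _match(self):
        # Compare the roots of both heaps and settle at the midpoint if they cross.
        if self.asks and self.bids:
            p_ask, p_bid = self.asks[0][0], -self.bids[0][0]
            if p_ask <= p_bid:
                ask = heapq.heappop(self.asks)
                bid = heapq.heappop(self.bids)
                self.ledger.append((ask[2], bid[2], (p_ask + p_bid) / 2))
```

Storing a sequence number next to the price gives the time-of-submission tie-break required of the platform, and negating bid prices lets the standard min-heap act as a max-heap.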

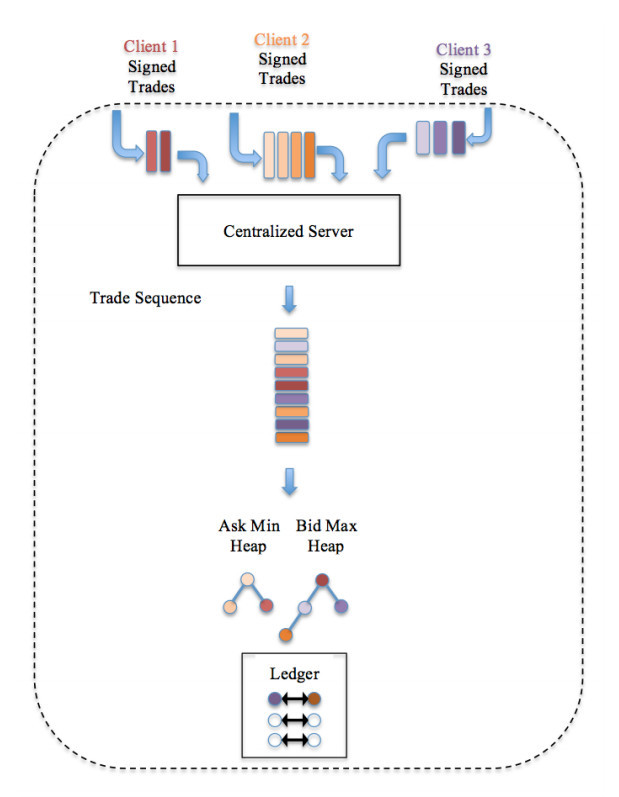

A Simple Implementation

Imagine a platform implementing the algorithm above on a client-server model. Server S operates like a typical current day brokerage firm. The registration process could simply be C generating a public/private key pair of an asymmetric cryptographic scheme, but revealing only the public key part of the pair to associate with an account owned by C. After registration, a client C places a trade T with S. The server attempts to fulfill T immediately; if it cannot, T is persisted to the collection of outstanding trades. Once a matching trade T' arrives, S would attempt to match T against T', write the transaction between T and T' to the ledger, and notify the bidder and seller of its action. Traditional database technology ensures the transactional integrity against component failures during this process. Once fulfilled, S could make the relevant portion of the ledger available for inspection upon demand by C at a later time. See Figure 5.

Does this model satisfy the privacy requirement? The answer depends on the regulatory environment in which the exchange is operating. If the regulation demands that all clients be associated to some form of government issued identifier at the time of registration, then the exchange would have to comply and demand a proof of identification from every client. This is the model used by traditional banks and brokerages. On the other hand, some emerging blockchain currency platforms allow the client’s account to be linked with only the public key portion of a key pair generated by the client. (Note that there have been statistical correlation attacks on the privacy proffered by this model [24].) The non-repudiation requirement could simply translate to insisting that C sign each trade using the private key corresponding to the public key that is associated with C's account. To meet the confidentiality requirement, trades can be securely transmitted from C using the HTTPS protocol. In addition, S must exercise care while executing and storing transactions, and also at the time of revealing the relevant portions of the history only to the legitimate parties by making sure that they are entitled to receive this information. These are all well-known security best practices, and already exist in most blockchain platforms.

We have described a solution without any need for a consensus mechanism. Where does it fall short? As mentioned before, this is the centralized-trust model: server S is assuming the role of a TTP. But, given that operating in a totally trustless environment is hopelessly difficult, what else is wrong with it? It suffers from a single point of failure and offers no crash resistance whatsoever. A use case such as a gold exchange must promise very high availability. This is where consensus comes into play. As trades are submitted from different geographical locations of a wide-area network, they are replicated across all the participating nodes, and each node maintains a global copy of the heaps Amin and Bmax. At the time of trade fulfillment, each node independently deletes the respective trades from the heaps and persists the transaction to the local ledger. This way, even when f nodes fail in a network of 2f + 1 participants, the AuX platform can make progress and maintain a consistent distributed ledger. Implementation details can be found in Appendix B.
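The 2f + 1 figure follows from majority-quorum arithmetic for crash faults, which the following sketch makes explicit. The helper names are hypothetical, and the sketch only covers nodes that fail by stopping, not malicious ones.

```python
def max_crash_faults(n: int) -> int:
    """Largest f such that n >= 2f + 1, i.e. the crash failures a majority scheme survives."""
    if n < 1:
        raise ValueError("need at least one node")
    return (n - 1) // 2


def quorum(n: int) -> int:
    """Smallest group size such that any two groups share at least one node."""
    return n // 2 + 1


if __name__ == "__main__":
    for n in (3, 5, 7):
        print(n, "nodes: quorum", quorum(n), "tolerates", max_crash_faults(n), "crashes")
```

Because any two majorities intersect, a decision recorded by one quorum is always visible to the next one, which is why the surviving f + 1 nodes can keep the ledger consistent.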

Making AuX Production Ready

Our description of a simplified exchange exposed several questions to ponder before it can be deployed into production:

- Security Though the nodes are vetted while joining a distributed network, if any one of them gets compromised, then the entire system is doomed. A chain is only as strong as its weakest link. Therefore, the security of the exchange does weaken when one server is replaced with a collection. It is clear that there is built-in Sybil resistance in a permissioned setting, but what other attacks are possible if one of the nodes goes rogue? That is, the Byzantine, permissioned model requires careful study.

- Privacy Assuming the nodes running DConE are registered and vetted, all nodes must comply with the local regulations and collect identifying information from their clients. It also appears that if the exchange is operating across international borders, the most restrictive regulation is going to prevail across all nodes of the system.

- Scale For a platform that spans a wide-area network, what are the scaling and performance issues, particularly under failure and partition scenarios? What exactly is needed to realize low latency and high throughput? How is the ledger stored and retrieved as it grows unboundedly?

- Disintermediation Does the proposed exchange promise any shorter fulfillment times when operated at a global scale? Is it a cheaper alternative to the existing methods? What about the chain-of-custody: how are the assets secured and transferred between different owners? Answers to these questions require in-depth domain knowledge and are not central to this paper.

The Key to Consensus

Those who cannot remember the past are condemned to repeat it. (George Santayana)

Consensus has a long, rich academic history spanning almost 40 years. In this section, we will try navigating through the maze of consensus mechanisms. There is renewed interest in this problem because of the advent of blockchains. There appear to be several surveys on consensus, at least one every decade for the last 4 decades [23,37,56,98]. Consensus is at the heart of many other distributed computing problems and can be used as a primitive to solve them.

The entities taking part in the consensus algorithm can come from {processes, threads, processors, nodes, servers, clients} or more abstractly from {actors, agents, participants}. The meaning should be clear from the context. Note that there may be multiple logical nodes per physical machine.

If two separate nodes running identical software guarantee the same results, we say that the program is deterministic. Non-determinism could enter software in the form of program execution that depends on the value of a random number, or on local time, etc. We limit our discussion in this paper only to deterministic software. We will also assume that nodes have unique identities and that there are no anonymous nodes.

The distinction between synchronous and asynchronous is important. In the former model, it is assumed that there is a global clock, the message delay is bounded and that bound is known a priori. This leads to simplification of many algorithms. For example, from the absence of a message one may be able to infer the state of the network. There is extensive literature based on this model. See [84] and the references therein. This work may be useful for multiprocessor parallel computers, but for algorithms that need to run on wide-area networks these assumptions are too restrictive. In contrast, the asynchronous model assumes that the message delays are finite, but unbounded and unknown. Also, no assumption is made about the drift of local clocks.

Let us now look at the security and fault-tolerance model. In the fail-stop model, nodes fail by stopping. When a failed node comes back up, it would retain its identity. Fail-stopped nodes could take arbitrary time to come back alive, or they might have been permanently decommissioned. The messages sent to a node when it is down are simply lost. In this model, nodes, though unreliable, are nonetheless assumed to be not malicious.

For financial applications, such as an online gold exchange, while it may be reasonable to assume that every participant has been vetted at the time of joining, it is unreasonable to assume that they stay trustworthy forever. As mentioned before, a chain is only as strong as its weakest link. Once one of the nodes gets compromised and starts to act maliciously, the fail-stop model is completely inadequate. To account for malicious nodes, we need to define the Byzantine fault tolerance model wherein no assumption is made regarding the behavior of nodes, i.e. nodes could be malicious.

Properties of correct consensus are as follows - given a set of nodes, each with an initial value:

- Termination All non-faulty nodes eventually decide on a value,

- Agreement All non-faulty nodes that decide do so on the same value, and

- Validity The value that has been decided must have been proposed by some node

Agreement and validity are safety properties, while termination is a liveness property. It has been proven that time-bounded consensus, even in the asynchronous fail-stop model, is not always possible [57]. The solutions we see in the literature typically only guarantee eventual liveness or employ randomization to get around this impossibility result.
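The three properties can be written as checks over a snapshot of one run. In this sketch each node maps to the value it proposed and, if it has decided, to the value it decided. Termination is an “eventually” property, so a snapshot can only report whether every non-faulty node has decided so far. The function name and data layout are hypothetical.

```python
def check_consensus(proposals, decisions, faulty=frozenset()):
    """proposals: node -> proposed value; decisions: node -> decided value (absent if undecided)."""
    correct = [node for node in proposals if node not in faulty]
    decided = [decisions[node] for node in correct if node in decisions]
    return {
        # Liveness: every non-faulty node has reached a decision (as of this snapshot).
        "termination": all(node in decisions for node in correct),
        # Safety: no two non-faulty nodes decided differently.
        "agreement": len(set(decided)) <= 1,
        # Safety: every decided value was proposed by some node.
        "validity": all(value in proposals.values() for value in decided),
    }
```

A run in which one node has not yet decided fails only the termination check, which reflects the safety/liveness split: nothing bad has happened, but something good has not happened yet.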

One easy way out of solving a distributed computing problem is to reduce it to a centralized solution. It can be done like this:

- The nodes elect a leader.

- The leader does all the computation. Since it is now reduced to a sequential model, this step is simple, and

- Finally, the leader broadcasts the results from the previous step.

There is a definite lack of aesthetic appeal to this approach. This solution is not symmetric. If the elected leader keeps crashing, the nodes are going to spend most of the time electing a leader instead of doing anything useful. From a security standpoint, the leader must be fully trusted. So, this approach might not work in the Byzantine case. Despite these limitations, leader-based approaches are used quite often in small scale replication solutions. Zookeeper’s implementation seems to use this approach [83]. The following text is taken from their website [1]:

“ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services, applications initially usually skimp on them, which make them brittle in the presence of change and difficult to manage. Even when done correctly, different implementations of these services lead to management complexity when the applications are deployed.”

In short, this method suffers from all the shortcomings of the trusted-third party solutions.
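The three-step reduction to a centralized solution can be sketched as follows. The election rule used here, picking the live node with the smallest identifier, is an assumption chosen for brevity and relies on nodes having unique identities. Failure detection and message passing are not modeled, and the function names are hypothetical.

```python
def elect_leader(alive_nodes):
    """Step 1: pick the live node with the smallest identifier (an assumed election rule)."""
    if not alive_nodes:
        raise RuntimeError("no live node to elect")
    return min(alive_nodes)


def run_round(alive_nodes, inputs, compute):
    """Steps 2 and 3: the leader computes sequentially and every live node receives the result."""
    leader = elect_leader(alive_nodes)
    # Only the leader does the work, so this is an ordinary sequential computation.
    result = compute([inputs[node] for node in sorted(alive_nodes)])
    # The "broadcast" is modeled as handing the same result to every live node.
    return leader, {node: result for node in alive_nodes}
```

Every node simply accepts whatever the leader sends, which makes the trust placed in the leader explicit: a faulty or malicious leader would go unnoticed in this scheme.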

With these definitions and background out of the way, let us see what is possible. Given the importance of consensus, why are there no production-grade, scalable, open-source implementations, similar to the likes of gcc or Apache HTTP server? This leads us to the next subsection.

Implementing Consensus Algorithms is Hard

The consensus problem statement looks deceptively simple. In fact, when Lamport originally authored a solution called Paxos (named after a fictional legislative consensus system used on the Paxos island in Greece) and submitted it to a journal, it was rejected as insignificant [60]. Lamport went on to win the Turing Award in 2014. Paxos was mentioned as one of his significant contributions to computer science [74]. To date, it remains one of the best known algorithms for this problem.

Most people looking for an open-source implementation of consensus turn to Raft. This algorithm was the outcome of Ongaro’s PhD thesis under the guidance of Ousterhout and was proposed as an alternative to Paxos. The authors justify their work by leveling several criticisms against Paxos. Here are some [92]:

“... Paxos is exceptionally difficult to understand ... We struggled with Paxos ourselves ... it does not provide a good foundation for building practical implementations ... Another problem is that Paxos uses a symmetric peer-to-peer approach at its core (though it eventually suggests a weak form of leadership as a performance optimization). This makes sense in a simplified world ... Paxos’ formulation may be a good one for proving theorems about its correctness, but real implementations are so different from Paxos that the proofs have little value ... Because of these problems, we concluded that Paxos does not provide a good foundation either for system building or for education”

The criticism of Paxos’ symmetric peer-to-peer approach runs counter to the conventional wisdom in the distributed computing community and to the argument put forth in the previous section. How can a symmetric approach to distributed computing be a weakness? It should be a strength. Note that Ongaro not only got a PhD for his work from a top-tier institution, but the Raft paper received the Best Paper Award at one of the premier conferences in computer systems. At about the same time the Raft authors were railing against the practical relevance of Paxos, a startup company [7] was formed with Paxos as the basis and proved its practical applicability to the distributed systems field. A few years later several suggestions for implementing Paxos appeared [33,82,104]. It is also worth noting that Raft was never published in a refereed journal with proofs. It was tested for bugs by a neutral third-party distributed systems validator, Jepsen, and found to have issues [69].

This does not appear to be an isolated occurrence. Another published work from a different top-tier institution proposes a solution for Byzantine fault tolerance [72,73], receives a best paper award from a first-rate systems conference, and appears in a respectable journal, only for it to be realized 10 years later that it violates safety. Here is a third example of an award-winning paper with a liveness violation [78], discovered 12 years after its publication in 2005. This paper got published in an IEEE journal [79] as well! These issues and solutions were reported in [15].

From the foregoing discussion we can conclude:

- Consensus is hard [47],

- There is temptation to rush headlong into concocting home brew solutions, and

- It never pays to quickly “hack” something together, underestimating the importance of formalism and thorough reasoning

With these as the guiding principles, we are now ready to examine the solutions that have been proposed for blockchains, the subject of the next subsection.

Consensus Mechanisms Galore

If you can’t solve a problem, then there is an easier problem (preferably related to the original!) you can solve: find it. (George Pólya)

The academic world is not alone: the “The published, time-tested algorithms are hard to understand; let me invent my own. After all, how hard can it be?” bravado pervades the blockchain industry as well. A high-level survey of various recent consensus implementations is given by Colyer [45]. Also, see [19] and the Related Work sections in [23,96]. There is a rich body of academic literature on using randomization, starting with the seminal paper by Ben-Or [16,25] in 1983. Since the publication of this paper, literally hundreds of papers have been written based on this approach. See [18,33] and the references therein. Unfortunately, some recent algorithms that use randomization, e.g. Swirlds [5, 22], on which the Hashgraph blockchain is based, completely ignore this entire body of work. Then there is PARSEC (Protocol for Asynchronous, Reliable, Secure and Efficient Consensus) [44,77], which claims to improve on Swirlds and acknowledges the prior art in randomized protocols, but whose authors write in the conclusion that it “... makes very weak synchrony assumptions...” Shouldn’t that make the title PWSRSEC, with WS standing for Weak Synchronous replacing Asynchronous?

Ripple, a multi-billion dollar financial blockchain company, built a hybrid consensus algorithm that is neither centralized nor completely distributed: the number of servers is limited, and all of them are owned by Ripple. Furthermore, the original consensus in Ripple was controversial [67].

When Byzantine tolerance is required, one algorithm that has stood the test of time is practical Byzantine fault tolerance (PBFT) by Castro and Liskov [38]. The blockchain product from IBM, Hyperledger 0.6, included PBFT as the default consensus mechanism but, surprisingly and without any justification, replaced it with a Kafka + Zab (Zookeeper Atomic Broadcast) ensemble [89] in Hyperledger Fabric [64]. Version 1.4 is slated to be released by the end of the 4th quarter and will offer a Raft consensus engine for ordering [13]. One possible explanation for these changes could be a lack of performance on a wide-area network [106]. (Refer to the discussion in Section 6 on Zab’s inability to scale to a wide-area network.) Hyperledger claims a pluggable consensus engine. In Sawtooth, consensus is obtained using a trusted computing platform base and is based on Proof of Elapsed Time (PoET). In this mechanism, each node starts a timer set to a random number and waits to submit a proposal until the timer expires. The trusted computing base, implemented in hardware, ensures that the creation of the timer and the submission of the proposal are tamper-proof. This idea has yet to prove itself in the marketplace.
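The timer mechanism of PoET can be illustrated with a small simulation. The following is only a sketch under stated assumptions: the function name `poet_round`, the uniform distribution, and the `max_wait` parameter are hypothetical, a seeded software random number generator stands in for the trusted hardware, and we assume that the node whose timer expires first is the one whose proposal is accepted for the round.

```python
import random


def poet_round(node_ids, rng, max_wait=10.0):
    """Simulate one PoET-style round and return (winner, wait_times).

    Assumption: a seeded RNG stands in for the trusted hardware that
    would generate and attest each timer value in a real deployment.
    """
    # Each node is handed a random wait time.
    wait_times = {node: rng.uniform(0.0, max_wait) for node in node_ids}
    # Assumed rule: the node whose timer expires first submits its proposal.
    winner = min(wait_times, key=wait_times.get)
    return winner, wait_times


rng = random.Random(42)
winner, waits = poet_round(["n1", "n2", "n3", "n4"], rng)
print(winner, round(waits[winner], 3))
```

Because the wait times are drawn independently per round, each node has the same chance of winning, which is the fairness property the mechanism relies on; the sketch says nothing about attestation, which is the part the hardware is trusted to provide.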

Chandra and Toueg proved that atomic broadcast is theoretically equivalent to distributed consensus [39]. Rodrigues and Raynal show how it is used during crash recovery using quorums [97]. Van Renesse et al. [105] present a comparative study of the Paxos, Zookeeper Atomic Broadcast and Viewstamped Replication approaches to consensus and conclude that “... compute-intensive services are better off with a passive replication strategy such as used in VSR and Zab (provided that state updates are of a reasonable size). To achieve predictable low-delay performance for short operations during both normal case execution and recovery, an active replication strategy without designated majorities, such as used in Paxos, is the best option.” For financial applications that span a wide area, Paxos appears to be the right choice.

To summarize, the importance of choosing the right consensus mechanism cannot be overstated. Don’t get carried away by the outlandish marketing claims regarding transaction rates. For permissioned blockchains, two algorithms stand out for asynchronous applications that span the entire globe: for crash-resistance Paxos is suitable; if Byzantine fault tolerance is also required, then a PBFT implementation as described in [59] or HoneyBadger [85] would be a good starting point.
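The cost of moving from crash resistance to Byzantine fault tolerance can be made concrete with the standard replica-count bounds: a majority-quorum protocol such as Paxos needs 2f + 1 nodes to tolerate f crash faults, while PBFT-style protocols need 3f + 1 nodes to tolerate f Byzantine faults. The helper names below are hypothetical; the snippet is a back-of-the-envelope aid, not part of any protocol implementation.

```python
def min_nodes_crash(f):
    """Nodes needed to tolerate f crash faults with majority quorums."""
    return 2 * f + 1


def min_nodes_byzantine(f):
    """Nodes needed to tolerate f Byzantine faults (PBFT-style bound)."""
    return 3 * f + 1


def max_crash_faults(n):
    """Largest number of crash faults an n-node majority-quorum system survives."""
    return (n - 1) // 2


def max_byzantine_faults(n):
    """Largest number of Byzantine faults an n-node PBFT-style system survives."""
    return (n - 1) // 3


for n in (4, 7, 10):
    print(n, max_crash_faults(n), max_byzantine_faults(n))
```

For example, a seven-node deployment survives three crashed nodes but only two Byzantine ones, which is one reason to require Byzantine tolerance only when the threat model calls for it.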

Which Way is North?

These are truly exciting times for developing distributed computing applications. We will conclude this paper by looking at some tantalizing opportunities the recent developments have created and suggesting some future directions. Consider the sharing economy. Imagine a ride-hailing service matching drivers with passengers, but without a multi-billion dollar corporation as the intermediary. This could conceivably be built on a purely distributed platform, just like the gold exchange described in Section 5.2, thus achieving true disintermediation and significant cost savings for the participants. This level of disintermediation naturally raises many questions. Who is in charge of vetting the participants, i.e. doing the background checks, vouching for the drivers’ skill and the condition of their vehicles, and checking the creditworthiness of passengers? What happens when things go wrong - who takes the responsibility? Bootstrapping distributed trust, similar to the concept of web-of-trust that was used in Pretty Good Privacy but approached from a broader perspective [27,36], requires further study. Sufficient research along these lines in the sharing economy space appears to be already underway to warrant a full-day workshop. See BlockSEA [11].

There is no doubt that government regulations directly or indirectly are going to play an important role in the future. We have already seen different countries take different approaches to Bitcoin – a few have banned it, some have allowed it, and it remains controversial in many others. It appears that most countries have taken the wait-and-see stance. Some, like South Korea and China, started out with a ban but now are working on regulating it. Notwithstanding this ambiguity, the cryptocurrency has grown at a very fast pace, amassing a total aggregate market capitalization value of a few hundred billion US dollars. One problem that seems to bog down the public blockchain world is its transaction rate.

There are copious claims on the Internet when it comes to blockchain performance: Hashgraph claims a transaction per second (tps) rate of 500,000 [22,66], Red Belly’s initial claims were even higher [6, 46], and Ethereum 2.0 can supposedly hit the 1 million tps mark sometime this year [95,107]. Note that when Red Belly repeated the benchmarking experiment with a more realistic setup, the number dropped by a factor of 20, to 30,000 [90]. These performance numbers are impressive, but could one buy a cup of coffee at the local cafe any time soon using any of these technologies? Probably not. What do these numbers mean for blockchain applications, if anything? What is the latency of these different methods? While there have been some studies comparing the performance of a few selected blockchain implementations against traditional databases such as BLOCKBENCH [49], this field could benefit from developing a comprehensive benchmarking standard. A study guided by scientific principles would be a worthwhile endeavor to establish credible performance standards for blockchains while clearly spelling out the trade-offs. The results would most likely differ based on the application domain.

How can Ethereum 2.0 [17,95] make such bold claims about its scale? After all, doesn’t the PoW/PoS get in the way? As mentioned before, there are two architectural decisions that could pave the way to surmounting the scalability obstacle: sharding and sidechains. The former is a well-known technique for partitioning data. The latter, however, is unique to blockchains. Sidechains allow a secure transfer of tokens between two blockchains using a cryptographic mechanism known as a two-way peg [20]. Lisk, a platform that enables developers to build and publish decentralized blockchain apps (DApps) using JavaScript, was the first to implement sidechains; each DApp lives in its own sidechain without interfering with the mainchain. The vision of the public blockchain community is to be able to run social media platforms and massively multiplayer online role-playing games (MMORPGs) on totally decentralized, censorship-free, community-driven networks. Konstantopoulos makes a persuasive case for running DApps on sidechains [70]. Another innovation with a similar motivation is off-chain payments using the Bitcoin Lightning Network [58], with Bitcoin as the arbiter, which claims an improvement of several orders of magnitude in transaction throughput. But then there is a counterclaim that the desired level of performance can only be achieved using centralized banking hubs [94]. Needless to say, exactly how these innovations improve the scalability of blockchains is not clear.

Privacy [53,71] on blockchains is an active area of research. It has been shown that using the public key of a key pair as a pseudonym is not quite enough. How are the privacy requirements of users reconciled against regulations? There are several open questions surrounding privacy that deserve attention from the research community.

Appendices

A Smart Contract Example in Solidity

Listing 1 shows an excerpt of a program in Solidity that implements a coin toss. Alice devises a contract consisting of a coin toss game. In it, she chooses heads or tails and commits to her choice. Anyone who guesses the committed value correctly wins the prize money. Bob decides to play and makes a guess. The code needed to ensure that multiple people cannot accept the bet and that Alice cannot change her original choice, along with other safety measures, is omitted for brevity. A completely worked out example can be found in [80].

    contract CoinToss {
        address public alice;
        address public bob;
        bool public bobChoice;
        uint256 public expiration; // reveal deadline; the code that sets it is among the omitted parts
        bytes32 public aliceCommitment; // commitment is SHA256(toss + nonce), where toss = 0 or 1

        function CoinToss(bytes32 commitment) public payable {
            // Alice sets up a coin toss game
            alice = msg.sender;
            aliceCommitment = commitment;
        }

        function takeBet(bool choice) public payable {
            // Bob takes the bet
            bob = msg.sender;
            bobChoice = choice;
        }

        // code for Alice to cancel if no player to bet
        ...

        function reveal(bool choice, uint256 nonce) public { // Alice reveals
            // code to ensure Alice has not changed the commitment
            if (bobChoice == choice) {
                bob.transfer(address(this).balance);
            } else {
                alice.transfer(address(this).balance);
            }
        }

        function claimTimeout() public {
            // Bob can claim a timeout if Alice refuses to reveal
            require(now >= expiration);
            bob.transfer(address(this).balance);
        }

        ...
    }

Listing 1: Coin toss example in Solidity
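The commit-and-reveal step that the listing leaves out can be sketched off-chain. The Python below is an illustration only: the function names are hypothetical, and the byte layout (one byte for the toss followed by a 32-byte big-endian nonce) is an assumption about what "toss + nonce" means, so it need not match the encoding used by an actual contract.

```python
import hashlib
import hmac
import secrets


def make_commitment(toss: int, nonce: int) -> bytes:
    """Return SHA-256 over an assumed encoding of toss followed by nonce."""
    if toss not in (0, 1):
        raise ValueError("toss must be 0 or 1")
    # Assumed layout: 1 byte for the toss, then the nonce as 32 big-endian bytes.
    payload = bytes([toss]) + nonce.to_bytes(32, "big")
    return hashlib.sha256(payload).digest()


def verify_reveal(commitment: bytes, toss: int, nonce: int) -> bool:
    """Check that a revealed (toss, nonce) pair matches the earlier commitment."""
    return hmac.compare_digest(make_commitment(toss, nonce), commitment)


# Alice commits to tails (1) with a fresh random 256-bit nonce.
alice_nonce = secrets.randbits(256)
alice_commitment = make_commitment(1, alice_nonce)

print(verify_reveal(alice_commitment, 1, alice_nonce))  # honest reveal
print(verify_reveal(alice_commitment, 0, alice_nonce))  # attempt to switch sides
```

The random nonce is what keeps Bob from recovering Alice's choice by hashing both possible toss values, and the check in `verify_reveal` is what prevents Alice from changing her choice after seeing Bob's guess.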

B AuX: Distributed Implementation of a Simple Gold Exchange

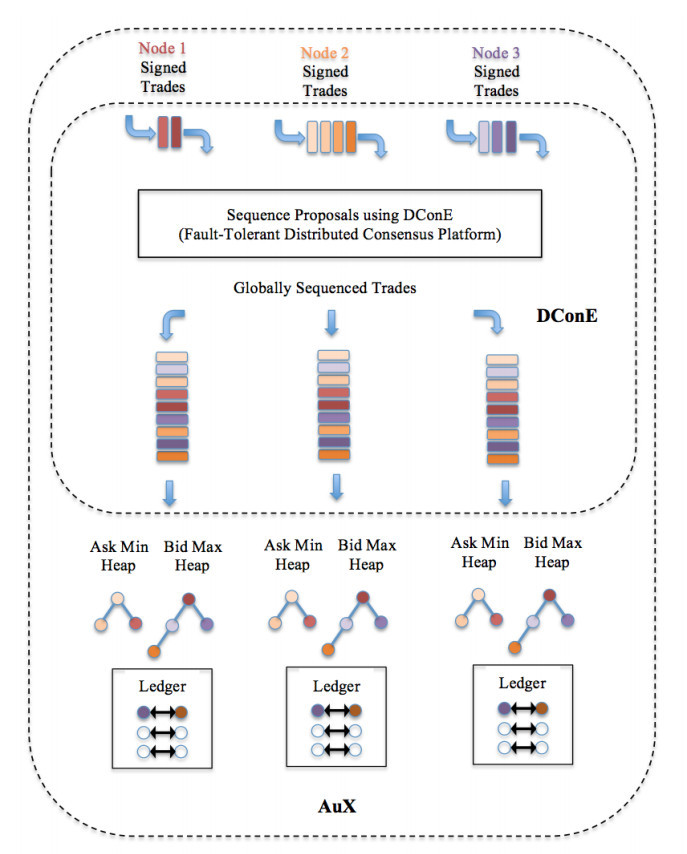

In a distributed implementation, there are several servers behind the scenes, giving the illusion of a single platform to the user. For the sake of simplicity, let us assume that none of the nodes are malicious, i.e. the non-Byzantine model. Otherwise, we would like to keep the model general by assuming that trades can arrive from geographically dispersed servers asynchronously, contending for a spot in the global sequence to be considered for fulfillment. The platform creates proposals out of these trade requests and hands them over to DConE to arrive at a consensus order, i.e. a global sequence of agreements, which is then made available in a fault-tolerant manner to all nodes. Once agreements are sequenced, the trades from them are used as input to identical application software running deterministically, much like the description given in Section 6. Since multiple copies of deterministic software running on the same inputs undergo an identical set of state changes, the result is a replicated state machine producing an identical distributed ledger.
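The replicated state machine argument can be checked with a toy model. In the sketch below, the class `AuxReplica`, the tuple format of an agreement, and the matching rule (a bid settles against the first pending ask it can afford) are all hypothetical simplifications; the rule is not the settlement algorithm of AuX, and the hard-coded list stands in for the sequence that DConE would output.

```python
class AuxReplica:
    """Toy deterministic application fed by the agreed global sequence."""

    def __init__(self):
        self.pending_asks = []  # (seller, price), kept in arrival order
        self.ledger = []        # (tx_id, buyer, seller, price)

    def apply(self, agreement):
        kind, customer, price = agreement
        if kind == "ask":
            self.pending_asks.append((customer, price))
            return
        # Toy rule: a bid settles against the first pending ask it can afford.
        for i, (seller, ask_price) in enumerate(self.pending_asks):
            if ask_price <= price:
                del self.pending_asks[i]
                tx_id = len(self.ledger) + 1
                self.ledger.append((tx_id, customer, seller, ask_price))
                return
        # Unmatched bids are simply dropped in this toy model.


# Stand-in for the global sequence of agreements.
global_sequence = [
    ("ask", "carol", 100),
    ("ask", "dave", 95),
    ("bid", "erin", 98),
    ("bid", "frank", 90),
]

replicas = [AuxReplica() for _ in range(3)]
for replica in replicas:
    for agreement in global_sequence:
        replica.apply(agreement)

print(all(r.ledger == replicas[0].ledger for r in replicas))
```

No replica communicates with another here; agreement on the input order alone is enough for the ledgers to coincide, which is why the consensus layer carries most of the burden.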

A key feature of this platform is crash resistance - progress can be made in achieving consensus about this global sequence order as long as a majority of the nodes are functioning at any given time. From the application programmer’s point of view, it is sufficient to submit DConE proposals, and consume agreements from the global sequence that are output. As an aside, note that it is possible to guarantee a FIFO order for proposals originating from the same server, that is, if a proposal p arrives before q at a server, then the agreement p appears before q in the global sequence, thus promising a notion of fairness.

Note the importance of the following fault-tolerance property, which follows from the crash-resistance guarantee of DConE: under node or link failures, and potential network partitions, the order of the agreements output by DConE is identical across all nodes. Therefore, as long as the application is deterministic, its state can be replicated reliably. This is essential to the correctness of AuX, viz. that the pending trades and the ledger remain consistent across all node instances. It is important to note that at any given instant, the nodes may not all return the same output when queried; identical results can be observed only when the system reaches a quiescent state, a moment in time when all the submitted proposals have been agreed upon, there are no new inputs, and every node instance is aware of all the agreements. For example, if a node crashes and comes back up, then for the system to come to a quiescent state, this recovering node must learn the agreements it missed while it was down.
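The notion of a quiescent state, and the catch-up a recovering node must perform to reach it, can be sketched as follows. The `Node` class, its `up` flag, and the shared `agreed_log` list are hypothetical stand-ins; how a real node learns missed agreements from DConE is not modeled here.

```python
class Node:
    """Toy node that applies agreements from a shared, ordered log."""

    def __init__(self, name):
        self.name = name
        self.up = True
        self.applied = 0   # number of agreements applied so far
        self.state = []    # stand-in for the application state

    def learn(self, agreed_log):
        """Apply, in order, every agreement this node has not yet seen."""
        if not self.up:
            return
        for agreement in agreed_log[self.applied:]:
            self.state.append(agreement)
            self.applied += 1


def is_quiescent(nodes, agreed_log):
    """True when every node is aware of every agreement."""
    return all(node.applied == len(agreed_log) for node in nodes)


agreed_log = ["a1", "a2"]
nodes = [Node("x"), Node("y"), Node("z")]
for node in nodes:
    node.learn(agreed_log)

# Node z crashes; two more agreements are reached without it.
nodes[2].up = False
agreed_log += ["a3", "a4"]
for node in nodes:
    node.learn(agreed_log)
diverged = nodes[2].state != nodes[0].state
quiescent_before = is_quiescent(nodes, agreed_log)

# Node z recovers and learns what it missed.
nodes[2].up = True
nodes[2].learn(agreed_log)
quiescent_after = is_quiescent(nodes, agreed_log)

print(diverged, quiescent_before, quiescent_after)
```

While node z is down, a query against it returns stale output even though the agreed order itself never diverges; once it has replayed the missed suffix of the log, all three nodes report the same state.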

Fulfilled orders are entered into a ledger, in which every entry should show, at the very minimum, the transaction ID, the buyer’s ID, the seller’s ID, and the trading price. One way to verify that the data across all nodes is identical under failures in a quiescent state is to query and examine the data from each node using a browser. To that end, a REST API could be built through which the ledger of each node instance can be examined while different failure scenarios are simulated. Similarly, the trading history of a customer can be queried.
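A minimal ledger entry and a cross-node consistency check might look like the sketch below. The field names, the integer price (assumed to be in the smallest currency unit), and the digest-based comparison are all assumptions made for illustration; the digest merely stands in for what a per-node query endpoint could return, and no actual REST interface is implied.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LedgerEntry:
    transaction_id: int
    buyer_id: str
    seller_id: str
    price: int  # assumed to be expressed in the smallest currency unit


def ledger_digest(ledger):
    """Hash a ledger in a canonical form so that nodes can be compared cheaply."""
    canonical = json.dumps([asdict(entry) for entry in ledger], sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def ledgers_match(ledgers):
    """True if every node reports the same ledger contents in the same order."""
    return len({ledger_digest(ledger) for ledger in ledgers}) == 1


def trading_history(ledger, customer_id):
    """All entries in which the customer was either the buyer or the seller."""
    return [e for e in ledger if customer_id in (e.buyer_id, e.seller_id)]


entries = [LedgerEntry(1, "erin", "dave", 95), LedgerEntry(2, "gina", "carol", 100)]
node_ledgers = [list(entries), list(entries), list(entries)]
stale_ledgers = [list(entries), entries[:1], list(entries)]

print(ledgers_match(node_ledgers), ledgers_match(stale_ledgers))
print(trading_history(entries, "carol"))
```

Comparing digests rather than full ledgers keeps the check cheap when simulating failure scenarios, and a mismatch simply signals that some node has not yet reached the quiescent state.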

Figure 6 shows the architecture of AuX with 3 node instances. AuX settles its trades by dequeuing agreements from the global sequence and processing them using Algorithm Settle Ask (p, q).

Privacy and non-repudiation requirements can be met in exactly the same way as in the client-server model. The only change needed to meet the confidentiality requirement is to require the node instances running DConE to communicate with each other using SSL.