Easily Migrate Apache Hive Metastore to AWS Glue Data Catalog

By Paul Scott-Murphy

May 13, 2021

Modern data architectures seek to enable data analysts, data engineers, and data scientists to quickly discover and use data to accelerate time to business insights. However, big data sets that are available only on-premises cannot take full advantage of the cloud-native, serverless, and cutting-edge services provided by AWS and its ecosystem of partners.

So how do data and analytics teams seamlessly migrate their data and metadata to the cloud to take advantage of the modern capabilities of these platforms?

The power of AWS Glue Data Catalog

The AWS Glue Data Catalog is a persistent, Apache Hive-compatible metadata store that can be used for storing information about different types of data assets, regardless of where they are physically stored. The AWS Glue Data Catalog holds table definitions, schemas, partitions, properties, and more. It automatically registers and updates partitions to make queries run efficiently. It also maintains a comprehensive schema version history that provides a record for schema evolution.
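As a rough illustration of what the catalog holds, the sketch below walks every database and table and summarizes each table's storage location, columns, and partition keys. It assumes a boto3 Glue client is passed in; the function name `iter_catalog_tables` and the shape of the summary dictionary are illustrative choices, not part of any AWS or WANdisco API.

```python
from typing import Any, Dict, Iterator


def iter_catalog_tables(glue_client: Any) -> Iterator[Dict[str, Any]]:
    """Yield one summary dict per table found in the Glue Data Catalog.

    `glue_client` is expected to behave like boto3.client("glue").
    """
    db_pages = glue_client.get_paginator("get_databases").paginate()
    for db_page in db_pages:
        for database in db_page["DatabaseList"]:
            table_pages = glue_client.get_paginator("get_tables").paginate(
                DatabaseName=database["Name"]
            )
            for table_page in table_pages:
                for table in table_page["TableList"]:
                    # StorageDescriptor and PartitionKeys may be absent,
                    # so fall back to empty values.
                    storage = table.get("StorageDescriptor", {})
                    yield {
                        "database": database["Name"],
                        "table": table["Name"],
                        "location": storage.get("Location"),
                        "columns": [c["Name"] for c in storage.get("Columns", [])],
                        "partition_keys": [
                            k["Name"] for k in table.get("PartitionKeys", [])
                        ],
                    }


# Example usage (assumes boto3 is installed and AWS credentials are configured):
#   import boto3
#   for summary in iter_catalog_tables(boto3.client("glue")):
#       print(summary)
```

Passing the client in as a parameter keeps the function easy to exercise against a stub before pointing it at a real account.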

The AWS Glue Data Catalog is flexible, reliable, and usable from a broad range of AWS native analytics services, third parties, and open-source engines. AWS maintains and manages the service so that you don’t need to spend time scaling as demands grow, responding to outages, ensuring data resilience, or updating infrastructure.

Why metadata is important

One of the critical capabilities of any analytics platform is how it manages technical and business metadata: the information that describes the data against which analytics operates. Legacy platforms like Apache Hadoop use Apache Hive to store and manage technical metadata, describing data in terms of databases, tables, partitions, etc.

The metadata stored in Apache Hive is valuable information for any organization that wants to democratize access to data. Teams may also migrate metadata so users can discover, understand, and query the data. Without the ability to discover, prepare and combine datasets with an understanding of their structure, analytics-at-scale is an impossible task, and datasets that cannot be used have no value.

LiveData Migrator makes migrating to AWS Glue Data Catalog easy

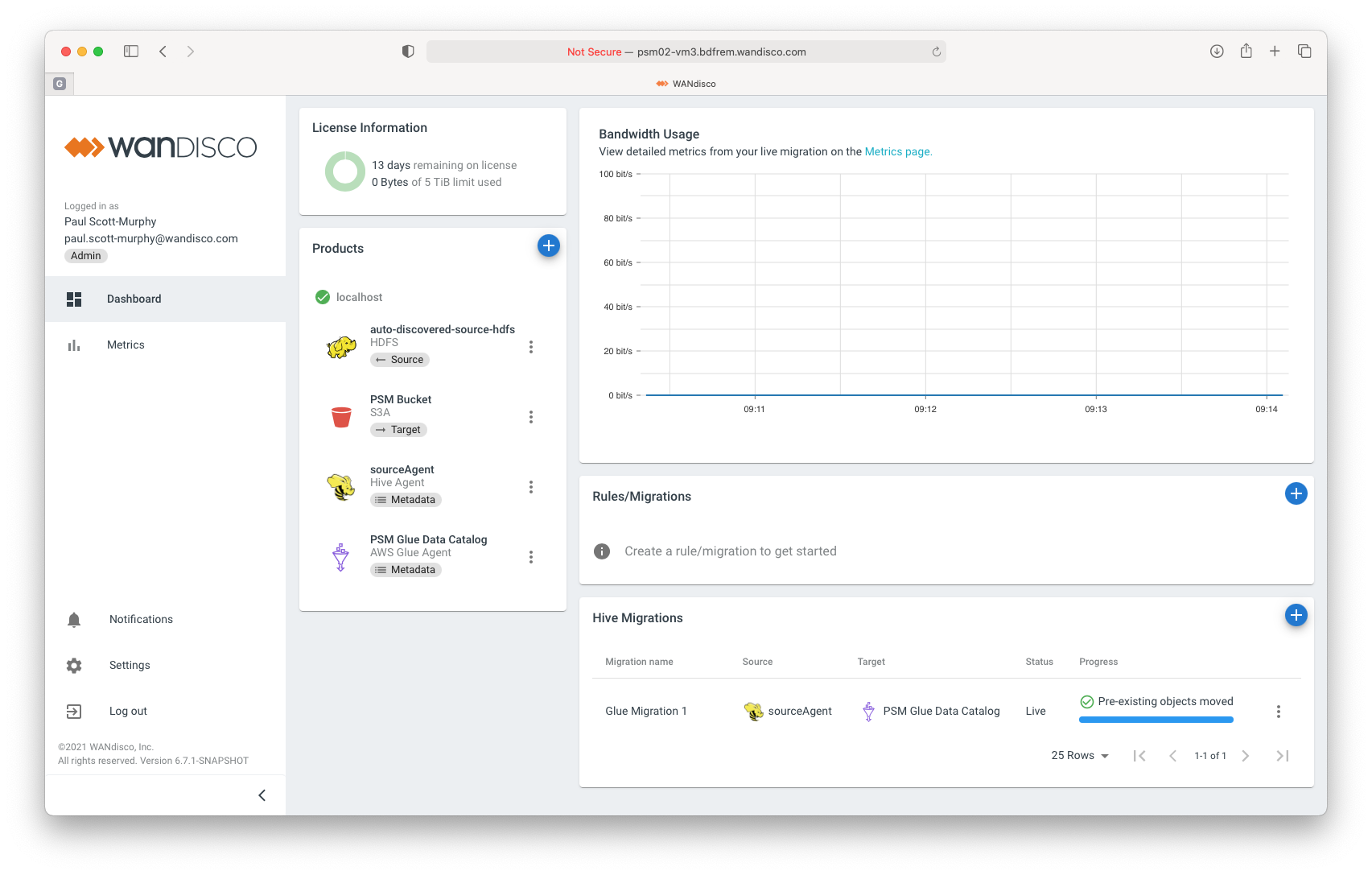

WANdisco provides the LiveData Cloud Services platform to migrate changing (“live”) data and metadata from Apache Hadoop, Hive, and Spark environments to the public cloud. From its inception, LiveData Migrator has supported migrating data stored on-premises to Amazon S3.

Learn more: Get started quickly with the AWS Prescriptive Guidance using WANdisco LiveData Migrator and learn how it enabled a multi-petabyte migration for GoDaddy.

In just a few steps, teams can migrate from an Apache Hive metastore, whether it resides on-premises or is self-managed on AWS or another cloud provider, to AWS Glue Data Catalog. LiveData Migrator eliminates complex and error-prone workarounds that require one-off scripts and configuration in the Hive metastore. It integrates with a wide range of databases used by the Hive metastore, making migration simple and painless. This means that the same strategy that has proved successful for organizations like GoDaddy when migrating hundreds of terabytes of business-critical data can now be applied to metadata as well. This simplifies big data migrations, greatly reduces business disruption during migration, and accelerates time to insight.

Simple to set up and get started

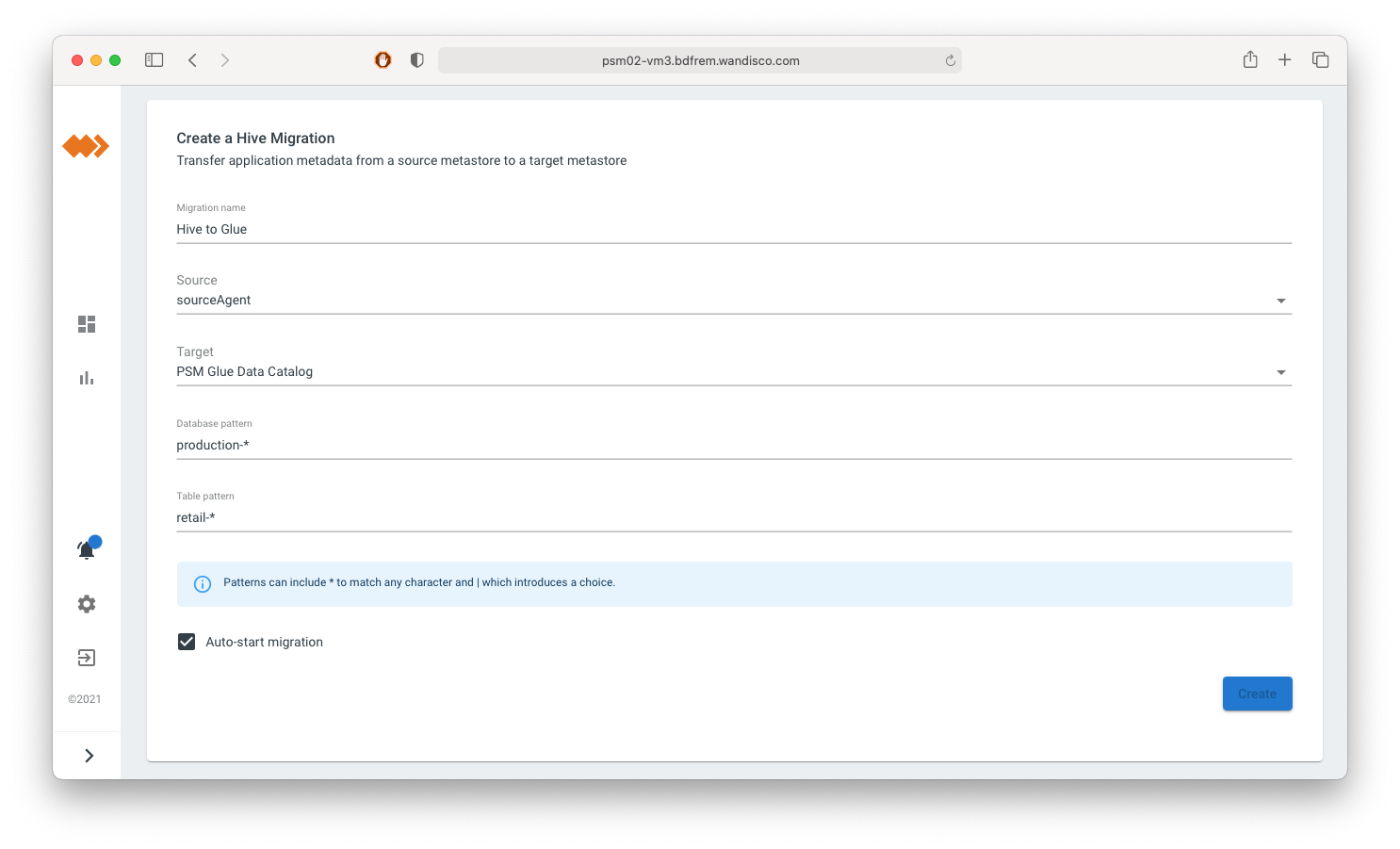

Migrate from an Apache Hive metastore to AWS Glue Data Catalog in two simple steps:

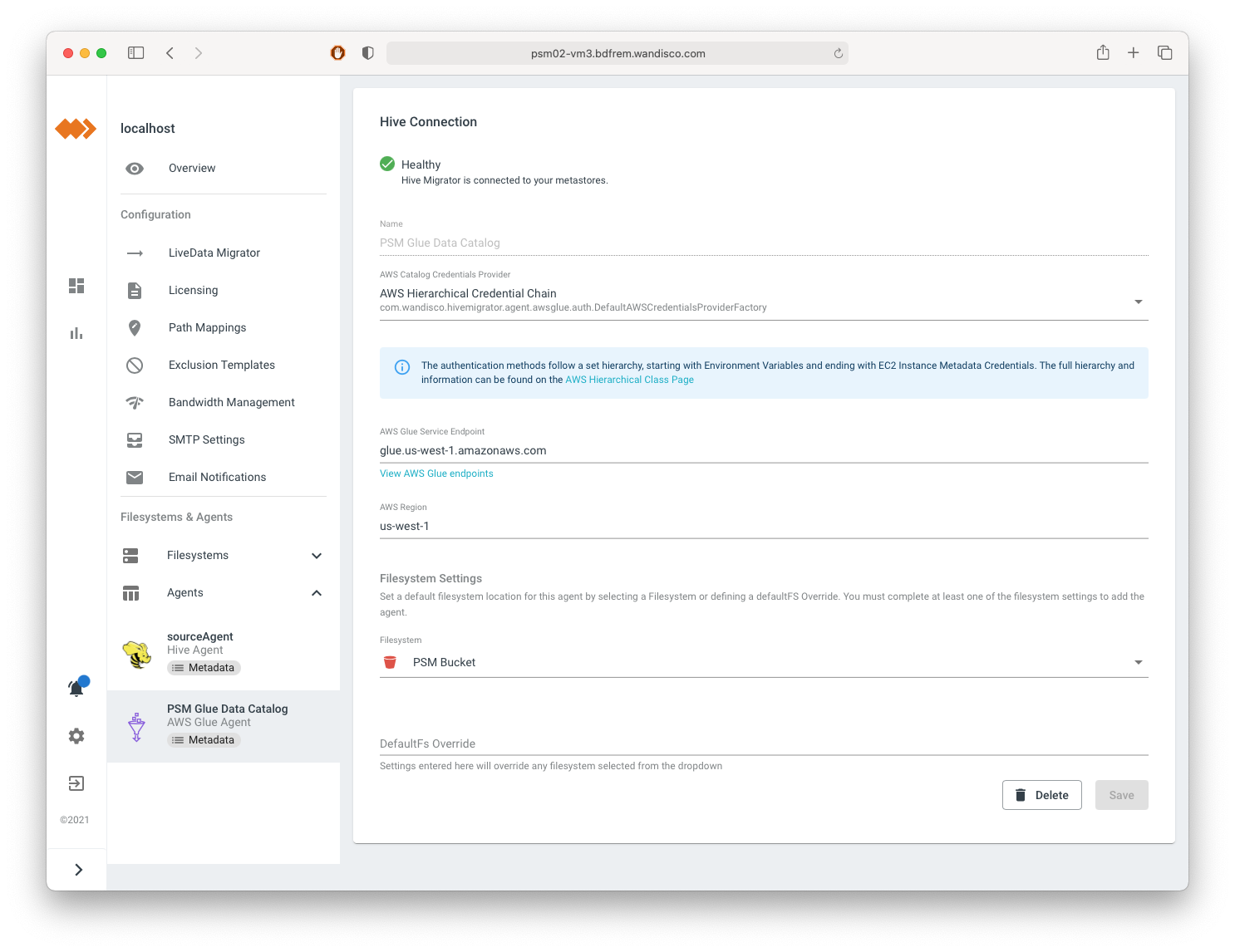

- Define your target Amazon S3 bucket used for table content and your AWS Glue Data Catalog for metadata.

- Select the source databases and tables that you want to migrate and start the migration.

Once the initial migration completes, LiveData Migrator continues to replicate new and updated metadata from the source as it changes. Once both data and metadata are on AWS, you can use AWS Lake Formation to define and enforce fine-grained access controls. Integrated analytics services such as Amazon EMR (running Apache Hive, Spark, and Presto), Amazon Redshift, and Amazon Athena can also query the data with no additional configuration.
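To make the querying step concrete, here is a minimal sketch of running a query against a migrated table from Amazon Athena with boto3. It assumes an Athena client is passed in and that query results are written to an S3 location you supply; the function name `run_athena_query`, the database name, and the bucket shown in the usage comment are hypothetical.

```python
import time
from typing import Any


def run_athena_query(
    athena_client: Any,
    sql: str,
    database: str,
    output_location: str,
    poll_seconds: float = 1.0,
    max_polls: int = 60,
) -> str:
    """Start an Athena query and poll until it reaches a terminal state.

    `athena_client` is expected to behave like boto3.client("athena").
    Returns the final state: SUCCEEDED, FAILED, or CANCELLED.
    """
    started = athena_client.start_query_execution(
        QueryString=sql,
        # The database name is resolved through the AWS Glue Data Catalog.
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]

    for _ in range(max_polls):
        execution = athena_client.get_query_execution(QueryExecutionId=query_id)
        state = execution["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            return state
        time.sleep(poll_seconds)

    raise TimeoutError(f"query {query_id} still running after {max_polls} polls")


# Example usage (hypothetical names; assumes boto3 and AWS credentials):
#   import boto3
#   state = run_athena_query(
#       boto3.client("athena"),
#       "SELECT COUNT(*) FROM orders",
#       database="sales",
#       output_location="s3://example-athena-results/",
#   )
```

A simple count like this is one way to spot-check that a migrated table is visible and readable on the AWS side before moving workloads over.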

Modern data teams benefit from incremental migration strategies

As with any migration, having a plan guides the effort and improves the odds of success. But not all plans lead to business benefits. There are fundamentally two migration strategies: a one-off “Big Bang” migration or an incremental migration that can take place over time. Big Bang migration approaches come with high risk of failure, high costs, and significant impacts to business operations. An ideal migration solution should use an incremental strategy that neither disrupts source analytics and business systems nor imposes significant extra load on source systems during migration.

Incremental migrations provide a gradual migration of system components over time in a way that allows them to be used throughout the process - potentially with some in their original state and some in their target state - without degrading overall system availability and functionality. Those components do not all need to move to the target state at the same time for the system as a whole to be functional. An incremental migration strategy should also include both data and metadata.

Accelerate time-to-value for analytics platforms

Companies can easily and quickly migrate the two most important aspects of the platform - data and metadata - with WANdisco LiveData Migrator. Continuous replication of data and metadata allows companies to maintain normal business operations on-premises while shortening the time it takes to capture benefits of AWS native analytics and machine learning services. This enables bursting time sensitive workloads to the cloud, supporting new use cases, and building new products that improve customer experience and increase top-line revenues.

Get started with a quick test drive

See how easy it is to migrate to AWS Glue Data Catalog with a free trial of WANdisco LiveData Migrator. Use it for selectively migrating data and metadata without business disruption, even while those datasets continue to change.

Paul Scott-Murphy

Chief Technology Officer, WANdisco

Paul has overall responsibility for WANdisco’s product strategy, including the delivery of product to market and its success. This includes directing the product management team, product strategy, requirements definitions, feature management and prioritisation, roadmaps, coordination of product releases with customer and partner requirements, and testing. Previously, he was Regional Chief Technology Officer for TIBCO Software in Asia Pacific and Japan. Paul has a Bachelor of Science with first class honours and a Bachelor of Engineering with first class honours from the University of Western Australia.