The Data Migration Gap

By Tony Velcich

Oct 05, 2020

Cloud Adoption and Data Architecture Modernization

Cloud adoption has increased significantly over the past decade. Much of that growth has come from the adoption of Software-as-a-Service (SaaS) applications. For example, in 2008 only 12% of businesses used cloud-based CRM; today that number is 87%.1 While SaaS applications still account for the majority of cloud service revenue, Gartner estimates that the compound annual growth rates (CAGRs) for Platform-as-a-Service (PaaS), at 20.2%, and Infrastructure-as-a-Service (IaaS), at 15.3%, will exceed the expected 12.4% CAGR for SaaS.2

The key driver for this growth is data. In a survey of 304 IT executives, Dimensional Research found that 100% of respondents are modernizing their technology architecture, with the top goals being to reduce costs, improve customer and employee satisfaction, and gain data-driven insights. In addition, 85% of respondents indicated that cloud initiatives were part of their modernization efforts.3

The Data Migration Gap

While organizations are benefiting from the performance, flexibility, and cost savings offered by the cloud, many enterprises have struggled with their data migration initiatives. Cloud data migration can be fraught with business risks, including disruption of critical business operations, data loss, and overall project complexity that often results in cost overruns or failed initiatives. According to Gartner, “Through 2022, more than 50% of data migration initiatives will exceed their budget and timeline, and potentially harm the business, because of flawed strategy and execution.”4

These issues are particularly present when using legacy data migration approaches, and they are what we at WANdisco call the “data migration gap”. How can organizations migrate petabytes of business-critical, actively changing customer data without causing business disruption, while minimizing the time, costs, and risks associated with legacy data migration approaches?

Legacy Data Migration Approaches

Legacy data migration approaches typically fall under the following categories.

Lift and Shift

A lift and shift approach is used to migrate applications and data from one environment to another with zero or minimal changes. This approach is probably the most common, as companies perceive it to be straightforward. However, it is simplistic and risk-laden because it assumes that no changes are needed. As a result, the majority of these projects fail. Instead of gaining the efficiencies promised by the cloud, organizations fail to take advantage of the new capabilities available to them and end up transferring the shortcomings of their existing implementation to the new cloud environment.

Summary of issues:

- Simplistic and risk-laden approach

- Results in failed projects

- Requires downtime during migration to prevent data changes from occurring

- All applications must be cut over at one time, requiring a “big-bang” approach

- Does not take advantage of new capabilities available in the cloud environment

- Often results in unexpected costs and performance issues

Incremental Copy

An incremental copy approach is where new and modified data are periodically copied from the source to the target environment using multiple passes over the source data. The approach requires that all original data from the source system is migrated to the target first, and incremental changes to the data are then processed with each subsequent pass. The issue with this approach is that if a large volume of data is changing continuously, it may be impossible to ever catch up and complete the migration without requiring downtime.

Summary of issues:

- Requires multiple passes of the source data

- May be impossible to catch up with all data changes

- May require application downtime

- Typically supports only small data volumes
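
The catch-up problem described above can be illustrated with a deliberately simplified model. This is only a sketch under stated assumptions, not a description of any real migration tool: the function name, the fixed copy and change rates, and the cutover threshold are all hypothetical. Each pass copies the current backlog, and whatever changes at the source while that pass runs becomes the backlog for the next pass.

```python
def simulate_incremental_copy(initial_tb, copy_rate_tb_per_hr,
                              change_rate_tb_per_hr,
                              cutover_threshold_tb=0.01, max_passes=50):
    """Simplified model of a multi-pass incremental copy (illustrative only).

    Assumes constant rates, which real workloads rarely have.
    Returns (converged, history), where history holds one
    (pass_number, hours_taken, backlog_after_pass_tb) tuple per pass.
    """
    backlog = initial_tb
    history = []
    for pass_number in range(1, max_passes + 1):
        # Time needed to copy everything currently outstanding.
        hours = backlog / copy_rate_tb_per_hr
        # Data modified at the source during this pass must be re-copied.
        backlog = hours * change_rate_tb_per_hr
        history.append((pass_number, hours, backlog))
        if backlog <= cutover_threshold_tb:
            return True, history
    return False, history


if __name__ == "__main__":
    # Changes arrive slower than the copy: the backlog shrinks every pass.
    ok, passes = simulate_incremental_copy(1000, 10, 2)
    print("slow change rate converged:", ok, "after", len(passes), "passes")

    # Changes arrive as fast as the copy: the backlog never shrinks.
    ok, passes = simulate_incremental_copy(1000, 10, 10)
    print("fast change rate converged:", ok)
```

In this model the backlog shrinks by the ratio of change rate to copy rate on every pass, so the migration only converges when data changes more slowly than it can be copied. Otherwise the remaining option is to stop the changes, which means application downtime.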

Dual Pipeline / Ingest

A dual pipeline or dual ingest approach is where new data is ingested simultaneously into both the source and target environments. This approach requires significant effort to develop, test, operate and maintain the multiple pipelines. It requires that all applications are modified to always update both source and target environments when performing any data changes.

Summary of issues:

- Increased development, test, and maintenance efforts

- Impacts on application complexity and performance

- Does not address the initial migration of source data
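
To make the application impact concrete, here is a minimal sketch of what a dual-write path might look like. Every name in it (`InMemoryStore`, `DualWriter`, `pending_repairs`) is hypothetical and stands in for real storage clients; it is an illustration of the pattern, not a recommended implementation.

```python
class InMemoryStore:
    """Hypothetical stand-in for a storage system (source or target)."""

    def __init__(self, name, fail_writes=False):
        self.name = name
        self.fail_writes = fail_writes
        self.data = {}

    def put(self, key, value):
        if self.fail_writes:
            raise OSError(f"{self.name} is unavailable")
        self.data[key] = value


class DualWriter:
    """Writes every change to both environments (illustrative only)."""

    def __init__(self, source, target):
        self.source = source
        self.target = target
        # Keys whose target write failed and must be reconciled later.
        self.pending_repairs = []

    def put(self, key, value):
        # The source stays the system of record, so write it first.
        self.source.put(key, value)
        try:
            self.target.put(key, value)
        except OSError:
            # The two environments have now diverged; the application
            # has to track and repair this itself.
            self.pending_repairs.append(key)


if __name__ == "__main__":
    source = InMemoryStore("on-prem")
    source.data["old-record"] = "written before dual ingest began"
    target = InMemoryStore("cloud")

    writer = DualWriter(source, target)
    writer.put("new-record", "v1")

    print("new-record" in target.data)   # new writes reach the target
    print("old-record" in target.data)   # pre-existing data does not
```

Even in this toy version, each application write path needs extra error handling and reconciliation logic, and data that existed before dual ingest started never reaches the target unless it is migrated separately.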

LiveData Migration Strategy

WANdisco’s LiveData strategy solves all of the challenges associated with the legacy data migration approaches. A LiveData strategy enables migrations to be performed without requiring any application changes or business disruption even while data sets are under active change. WANdisco’s LiveData capabilities perform migrations of any scale with a single pass of the source data, while supporting continuous replication of ongoing changes from source to target. Furthermore, WANdisco’s patented Distributed Coordination Engine enables active-active replication to support true hybrid cloud or multi-cloud implementations and ensure 100% data consistency across a distributed environment.

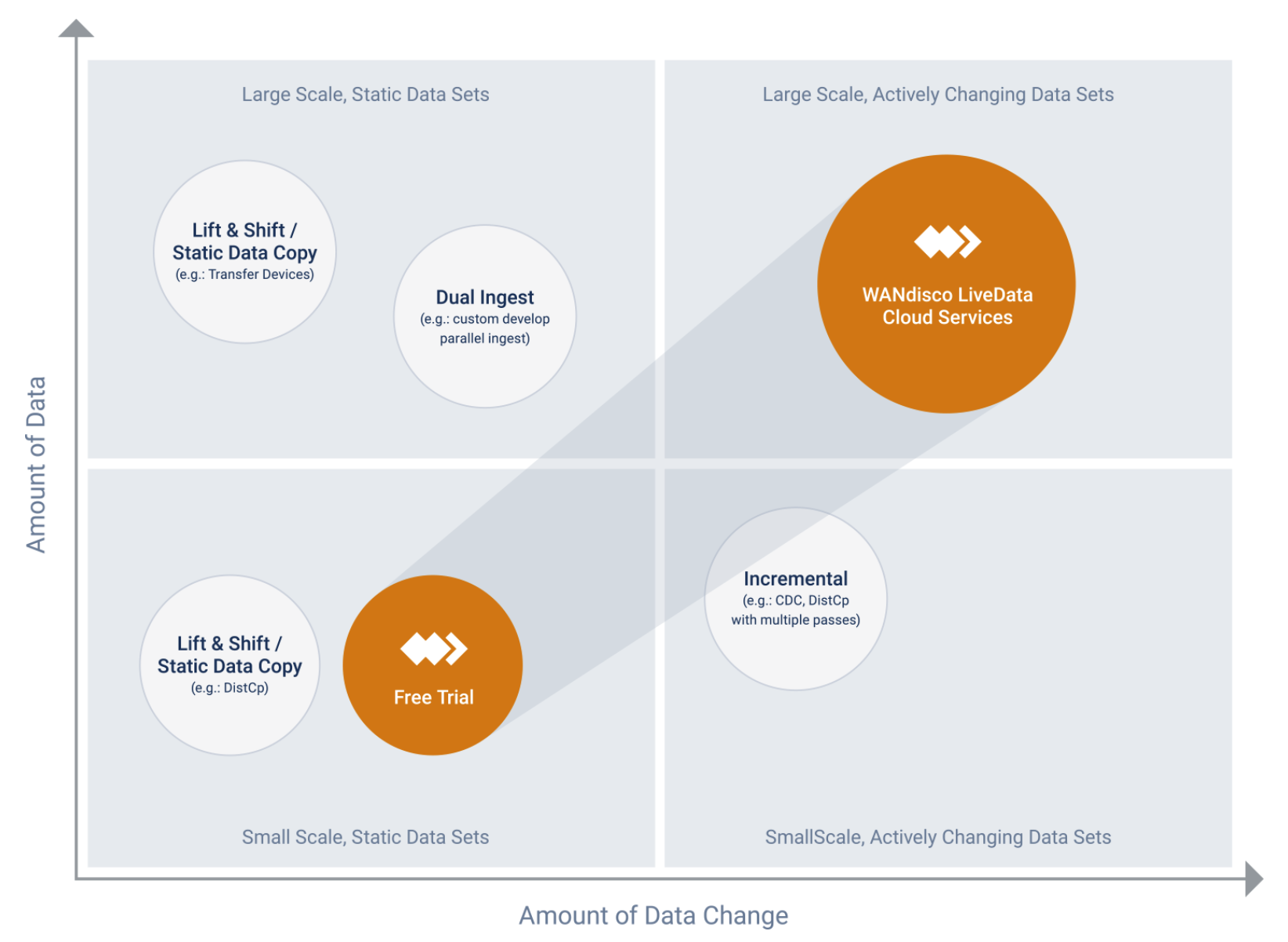

WANdisco’s LiveData approach supports data migration and replication use cases regardless of the data volume or the amount of data change occurring, and is the only approach able to cost-effectively manage large-scale data migrations even while large amounts of change are occurring.

WANdisco also offers a free trial version of LiveData Migrator, which enables organizations to migrate 5TB of data for free with zero risk. Because the same product scales to the largest data volumes, WANdisco can provide a solution for any data volume in between as well.

References:

1 SuperOffice, “18 CRM Statistics You Need to Know for 2020 (and Beyond)” By Mark Taylor, September 17, 2020

2 Enterprise Irregulars, “Public Cloud Soaring To $331B By 2022 According To Gartner” By Louis Columbus, April 14, 2019

3 Dimensional Research, “2019 IT Architecture Modernization Trends: A Survey of IT Executives at Large Enterprises” By Dimensional Research, July 2019

4 Gartner, “Make Data Migration Boring: 10 Steps to Ensure On-Time, High-Quality Delivery” By Ted Friedman, December 13, 2019

About the author

Tony Velcich, SR. DIRECTOR OF PRODUCT MARKETING, WANDISCO

Tony is an accomplished product management and marketing leader with over 25 years of experience in the software industry. Tony is currently responsible for product marketing at WANdisco, helping to drive go-to-market strategy, content and activities. Tony has a strong background in data management having worked at leading database companies including Oracle, Informix and TimesTen where he led strategy for areas such as big data analytics for the telecommunications industry, sales force automation, as well as sales and customer experience analytics.